13. Character Sets and Encodings¶

There ain't no such thing as plain text.

— Spolsky/Atwood

Character sets and encodings are what we use to store and transmit text with computers, making them fundamental to most every application. The two terms are sometimes used interchangeably, but the difference is important.

While the character set declares what symbols are defined and in what order, the encoding declares its byte-level format in storage or during transit over a network. If we’ve created a message and want to actually read it again later, these details are just as significant as knowledge of the human language used to convey it. Therefore, we shall strive to:

Tip: on Text

Always declare an encoding when creating or handling textual data.

It does not make sense to have a string without knowing what encoding it uses.

— Joel Spolsky, Blogger/co-founder StackExchange

The point Misters Spolsky and Atwood have attempted to hammer home above, is that any piece of text with no encoding specified will soon have some or all of its meaning lost—later to be rendered as garbage.

Due to the complexities of human languages and historical reasons, this subject is more complicated than you might first imagine.

Hint: Missing Characters?

If symbols in the text below are not displayed correctly, you may need to install additional fonts described below.

13.1. History¶

The Internet has progressed from smart people in front of dumb terminals, to dumb people in front of smart terminals.

— Proverb

Building on the human need to communicate, the history of text encoding for communication purposes is quite colorful. A few of the most notable early advances are listed below:

Electrical Telegraph - early 1800s-1860, made long-distance text-based communication possible for the first time, supplanting the “Pony Express.”

Morse Code - 1830-1840’s,

a manual telegraph code.

S.O.S. –> ••• ––– •••

Braille (Version 2) - 1837,

the first binary form of writing,

designed for blind folks.

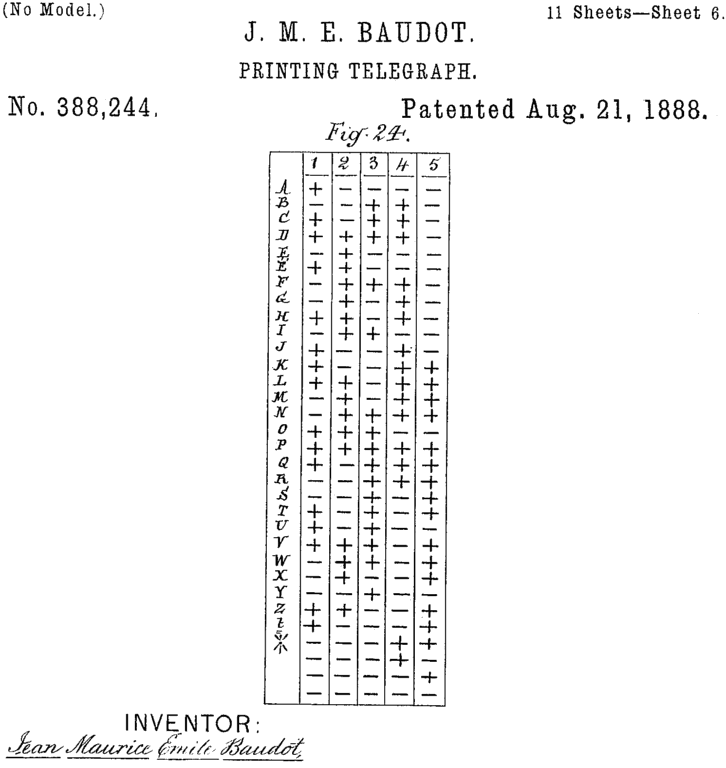

Baudot code (5-bit code) - 1870s, an automated (performed by machines) telegraph code.

Teletypes and Teleprinters - 1910s-1970s

Invented to speed communication and reduce costs—messages could be transmitted remotely without the need for operators trained in Morse code.

How did they work? Take a look. Rotary dial included!

In computing, especially in Unix-like operating systems, the legacy of teletypes lives on in the designations for serial ports and consoles (i.e., the text-only display mode), which have the prefix tty, such as /dev/tty5 for the fifth virtual console.

— linfo

EBDIC (a 6-bit code) - 1950s, a text encoding for early IBM mainframes; the only pre-ASCII encoding for computers you’ll find mentioned in modern times.

See also: Books

Code, by Charles Petzold

This nerdy book explores the early history of communications, writing systems, text encodings, number systems, basic electronics, logic gates, and introduces computer architecture to the beginner. Together, you’ll build a simple computer with the pieces both discovered and invented. Petzold’s enthusiasm draws you in, making for a thrilling read despite a subject matter some might consider dry. Recommended for the education of every computing professional that wants to know how it all actually works under the hood.

13.2. ASCII, a Seven-Bit Encoding¶

An encoding… with the unlikely pronunciation of ASS-key.

— Unknown (read the phrase somewhere, no help from Google)

____ .-'''-. _______ .-./`) .-./`)

.-, .' __ `. / _ \ / __ \ \ .-.') \ .-.') .-,

,-.| \ _ / ' \ \ (`' )/`--' | ,_/ \__) / `-' \ / `-' \ ,-.| \ _

\ '_ / | |___| / | (_ o _). ,-./ ) `-'`"` `-'`"` \ '_ / |

_`,/ \ _/ _.-` | (_,_). '. \ '_ '`) .---. .---. _`,/ \ _/

( '\_/ \ .' _ | .---. \ : > (_) ) __ | | | | ( '\_/ \

`"/ \ ) | _( )_ | \ `-' | ( . .-'_/ ) | | | | `"/ \ )

\_/``" \ (_ o _) / \ / `-'`-' / | | | | \_/``"

'.(_,_).' `-...-' `._____.' '---' '---'

Introduced in 1963 then updated in 1967 and 1986, ASCII (aka ISO 646) is the American Standard Code for Information Interchange, a ubiquitous standard for character encoding. Developed from automatic telegraph codes (no humans directly required, such as in Morse code), and printing teletype (tty) technology, it was defined as a seven-bit code, therefore able to encode 128 different values. ASCII was carefully designed, as was common in the days of acute resource constraints—each bit cost money in storage and in transit.

Centered around English text it contains the twenty-six letter basic Latin alphabet without diacritics, in upper and lower case (a shiny new feature), the numerals 0-9, the most common punctuation characters, and a number (32) of now largely obsolete printing control characters. It also introduced ordered alphanumeric symbols for convenient sorting.

Diving in!

Let’s take a closer look. Below ASCII is rendered as 8 columns of 16 rows (4-bits), with indexes in Decimal then Hex:

0 00 NUL 16 10 DLE 32 20 48 30 0 64 40 @ 80 50 P 96 60 ` 112 70 p

1 01 SOH 17 11 DC1 33 21 ! 49 31 1 65 41 A 81 51 Q 97 61 a 113 71 q

2 02 STX 18 12 DC2 34 22 " 50 32 2 66 42 B 82 52 R 98 62 b 114 72 r

3 03 ETX 19 13 DC3 35 23 # 51 33 3 67 43 C 83 53 S 99 63 c 115 73 s

4 04 EOT 20 14 DC4 36 24 $ 52 34 4 68 44 D 84 54 T 100 64 d 116 74 t

5 05 ENQ 21 15 NAK 37 25 % 53 35 5 69 45 E 85 55 U 101 65 e 117 75 u

6 06 ACK 22 16 SYN 38 26 & 54 36 6 70 46 F 86 56 V 102 66 f 118 76 v

7 07 BEL 23 17 ETB 39 27 ' 55 37 7 71 47 G 87 57 W 103 67 g 119 77 w

8 08 BS 24 18 CAN 40 28 ( 56 38 8 72 48 H 88 58 X 104 68 h 120 78 x

9 09 HT 25 19 EM 41 29 ) 57 39 9 73 49 I 89 59 Y 105 69 i 121 79 y

10 0A LF 26 1A SUB 42 2A * 58 3A : 74 4A J 90 5A Z 106 6A j 122 7A z

11 0B VT 27 1B ESC 43 2B + 59 3B ; 75 4B K 91 5B [ 107 6B k 123 7B {

12 0C FF 28 1C FS 44 2C , 60 3C < 76 4C L 92 5C \ 108 6C l 124 7C |

13 0D CR 29 1D GS 45 2D - 61 3D = 77 4D M 93 5D ] 109 6D m 125 7D }

14 0E SO 30 1E RS 46 2E . 62 3E > 78 4E N 94 5E ^ 110 6E n 126 7E ~

15 0F SI 31 1F US 47 2F / 63 3F ? 79 4F O 95 5F _ 111 6F o 127 7F DEL

These eight columns were called “sticks” in the official docs.

The 4-bit columns were meaningful in the design of ASCII. The original influence was binary-coded decimal and one of the major design choices involved which column should contain the decimal digits. ASCII was very carefully designed; essentially no character has its code by accident. Everything had a reason, although some of those reasons are long obsolete.

— kps on HN

Below is a different view, in order to make a few relationships more obvious. Four columns of thirty-two (5 bits at a time) in binary are shown with the first two bits separated by a hyphen to visually separate them:

Group 00 01 10 11

-------------- ------------ ------------ ------------

00-00000 NUL 01-00000 10-00000 @ 11-00000 `

00-00001 SOH 01-00001 ! 10-00001 A 11-00001 a

00-00010 STX 01-00010 " 10-00010 B 11-00010 b

00-00011 ETX 01-00011 # 10-00011 C 11-00011 c

00-00100 EOT 01-00100 $ 10-00100 D 11-00100 d

00-00101 ENQ 01-00101 % 10-00101 E 11-00101 e

00-00110 ACK 01-00110 & 10-00110 F 11-00110 f

00-00111 BEL 01-00111 ' 10-00111 G 11-00111 g

00-01000 BS 01-01000 ( 10-01000 H 11-01000 h

00-01001 HT 01-01001 ) 10-01001 I 11-01001 i

00-01010 LF 01-01010 * 10-01010 J 11-01010 j

00-01011 VT 01-01011 + 10-01011 K 11-01011 k

00-01100 FF 01-01100 , 10-01100 L 11-01100 l

00-01101 CR 01-01101 - 10-01101 M 11-01101 m

00-01110 SO 01-01110 . 10-01110 N 11-01110 n

00-01111 SI 01-01111 / 10-01111 O 11-01111 o

00-10000 DLE 01-10000 0 10-10000 P 11-10000 p

00-10001 DC1 01-10001 1 10-10001 Q 11-10001 q

00-10010 DC2 01-10010 2 10-10010 R 11-10010 r

00-10011 DC3 01-10011 3 10-10011 S 11-10011 s

00-10100 DC4 01-10100 4 10-10100 T 11-10100 t

00-10101 NAK 01-10101 5 10-10101 U 11-10101 u

00-10110 SYN 01-10110 6 10-10110 V 11-10110 v

00-10111 ETB 01-10111 7 10-10111 W 11-10111 w

00-11000 CAN 01-11000 8 10-11000 X 11-11000 x

00-11001 EM 01-11001 9 10-11001 Y 11-11001 y

00-11010 SUB 01-11010 : 10-11010 Z 11-11010 z

00-11011 ESC 01-11011 ; 10-11011 [ 11-11011 {

00-11100 FS 01-11100 < 10-11100 \ 11-11100 |

00-11101 GS 01-11101 = 10-11101 ] 11-11101 }

00-11110 RS 01-11110 > 10-11110 ^ 11-11110 ~

00-11111 US 01-11111 ? 10-11111 _ 11-11111 DEL

Note: Each column above differs only in the first two bits (from the left). This form makes the relationships between the control characters and letters more apparent across rows. By default on a traditional keyboard, the first two bits of each character are set to one, in most cases producing a lower case letter (last column). Also:

The Ctrl key clears the first two bits, producing the “control character” corresponding to the left of the letter.

The Shift key clears the second bit producing an uppercase character (in most cases), simplifying “case-insensitive character matching and construction of keyboards/printers.” This is why the cases have a difference of thirty-two. For example in Python:

>>> ord('a') - ord('A') 32 >>> ord('z') - ord('Z') 32

Note the Delete key is all ones, which effectively “rubs out” a character on paper tape or punch card. In fact, early keyboards labeled it as “Rubout.”

Modern keyboards are a bit more complicated, but this explains several design cues of ASCII. One final view up next. If we factor the last five bits in common to each row, the relationships are further clarified:

Last-5 00 01 10 11 ← First 2 bits

↓

00000 NUL SP @ `

00001 SOH ! A a

00010 STX " B b

00011 ETX # C c

00100 EOT $ D d <-- End transmission

00101 ENQ % E e

00110 ACK & F f

00111 BEL ' G g <-- Bell

01000 BS ( H h <-- Backspace

01001 TAB ) I i <-- Tab

01010 LF * J j

01011 VT + K k

01100 FF , L l <-- Form feed

01101 CR - M m <-- Carriage return

01110 SO . N n

01111 SI / O o

10000 DLE 0 P p

10001 DC1 1 Q q

10010 DC2 2 R r

10011 DC3 3 S s

10100 DC4 4 T t

10101 NAK 5 U u

10110 SYN 6 V v

10111 ETB 7 W w

11000 CAN 8 X x

11001 EM 9 Y y

11010 SUB : Z z

11011 ESC ; [ { <-- Escape

11100 FS < \ |

11101 GS = ] }

11110 RS > ^ ~

11111 US ? _ DEL

Courtesy, user soneil at HN :

If you think of each byte as being 2 bits of “group” and 5 bits of “character”:

00 11011 is Escape 10 11011 is [

The Ctrl key sets the first two bits to zero, eh? 🤔 All of a sudden these keyboard combinations make a lot more sense, no? Mind, blown.

Ctrl+D - EOT - End transmission

Ctrl+G - BEL - Ring bell

Ctrl+H - BS - Backspace

Ctrl+I - TAB - Tab

Ctrl+L - FF - Form feed/Clear screen

Ctrl+M - CR - Carriage return

Ctrl+[ - ESC - Escape

See also: Moar History

Lots of additional juicy details may be found in the works below:

Things Every Hacker Once Knew, Eric S. Raymond

Inside ASCII , Part II , Part III , Interface Age Magazine (Awesome!)

Windows Command-Line: Backgrounder, history and Console modernization strategy.

13.3. Eight-bit Encodings¶

⣿

As desktop computers increasingly outnumbered those in laboratories during the 1980s (the Personal Computer revolution woot!) demand for characters in languages other than English skyrocketed. It wasn’t long before extended 8-bit variants based on ASCII, (such as legendary IBM code-page 437) were being whipped up left and right to support the plethora of languages used around the world. There was also quite a bit of space allocated to dialog box drawing characters on IBM and Commodore computers of the era. An intermediate step on the way to communication Nirvana.

While the strategy mostly worked, there were some significant drawbacks.

On some PCs the character code 130 would display as é, but on computers sold in Israel it was the Hebrew letter Gimel (ג), so when Americans would send their résumés to Israel they would arrive as rגsumגs.

— Joel Spolsky, Blogger/co-founder StackExchange

Only one language could be used in a particular document, and often per system at a time.

Document interchange was difficult, as different “code pages” needed be swapped in and out to view them.

Lack of industry-wide standards made documents non-interoperable with other operating systems and often versions.

Fonts often needed to be installed, without fallbacks.

While there were hundreds of these encodings, two of the most common eight-bit character encodings are detailed below, as you might still encounter them in the wild. (Older encodings particular to your locale may be found as well.)

ISO 8859-## (aka latin1)

§

The most popular text encodings used on the early web, “Web 1.0,” during the interim between ASCII and Unicode were the ISO/IEC 8859 series. Separated into parts, ISO 8859-1 to 15, they support many European and Middle-Eastern languages based on the Latin, Cyrillic, Greek, Arabic, Hebrew, Celtic, and Thai scripts.

Windows-1252 and Others

‡

Sometimes erroneously called ANSI format , Windows-1252 and other “code pages” substantially overlap with ISO 8859 series mentioned above.

They differ mainly in the removal of a block of rarely-used extended control characters, called C1 . Microsoft decided to replace them with a few perhaps more useful characters such as curly quotes and later the Euro (€) symbol, though this lead to further incompatibilities.

Of course these 8-bit encodings (with space for up to 256 characters) were never able to handle the Asian writing systems (CJKV ) that have thousands of symbols . When the Internet (specifically the world-wide web) exploded it soon became clear that swapping code-pages was a glaring dead-end.

Luckily, Unicode had already been invented and folks started noticing. With the success of Windows XP in 2001, Unicode had hit its stride and was finally being used by “the masses.”

13.4. DBCS¶

…or double-byte character sets are traditionally fixed-length encodings taking up 16-bits of space per character (with space for 65 thousand glyphs), necessary for CJKV (aka East-Asian) writing systems. Notably:

Big5 for traditional Chinese

GB Series for simplified Chinese

ShiftJIS for Japanese

The term DBCS is now somewhat obsolete, with “double” being swapped for “multiple.” These encodings, while once popular are in decline due to the emergence of Unicode. Another intermediate step on the way to…

13.5. Unicode¶

It’s hard to say anything pithy about Unicode that is entirely correct.

— John D. Cook, Why Unicode is subtle

�

Unicode is an ambitious attempt to catalog all the symbols used by humans, currently and throughout history into a single reference, called the Universal Character Set. Why everything? So that all text written in any language can be exchanged anywhere. The goal is a complete solution to symbolic communication between humans. This is a much larger job than often realized due to the complexities of the worlds writing systems, and why it is still augmented and improved regularly, over twenty-five years after its first release in 1991.

Universal Character Set (UCS)

Unicode is like a big database of characters and properties and rules, and on its own says nothing about how to encode a sequence of characters into a sequence of bytes for actual use by a computer.

— James Bennett

Note that the UCS is not concerned with storage formats, we shall deal with that shortly. Rather, the catalog of symbols is indexed by what are called code points—simply a list of positive integers with matching metadata such as a standard name and type attributes (letter, number, symbol…), lined up in space. One might think of them like the number-line you learned in math class in elementary or middle school.

“Sixteen Is Not Enough”

Despite a common early misconception that Unicode was limited to 16 bits or 65k characters, it is not. Currently Unicode allows for 17 planes of 65,536 possible characters, for a total of 1 million (1,114,112) possible characters. The current standard as of this writing, Unicode version 12.0 (2019) contains a total of 137,929 characters assigned.

13.5.1. Planes and Blocks¶

As mentioned, Unicode consists of seventeen planes i.e., of existence. Each plane is further divided into blocks, containing the following (highlights):

Basic Multilingual Plane (BMP)

The first plane is designed around backwards compatibility and tries to pack in everything practical that’s possible to fit. Blocks enshrined in history:

ASCII, as “Basic Latin”

ISO 8859-1 (the top half), as “Latin 1 Supplement”

It also contains:

Most modern languages (with non-huge numbers of codepoints)

Almost all common symbols, punctuation, currency

Mathematical operators and most symbols

Dingbats, geometric, and box-drawing symbols

Supplementary Multilingual Plane (SMP)

The second plane contains:

Historical scripts:

Ancient Greek

Egyptian Hieroglyphs

South, Central, and East Asian scripts.

Additional math and music notation (some historical)

Game symbols (cards, dominoes)

Emoji and other picto-graphics (food, sports)

Supplementary Ideographic Plane

Additional and historic Asian (CJKV) ideographs.

– 13. Unassigned Planes

Special-Purpose Plane

Currently contains non-graphical characters.

& 16. Private Use Area

Available for character assignment by third parties. Icon fonts such as FontAwesome often use these, however it uses the smaller private block under BMP 0.

13.5.2. Encodings¶

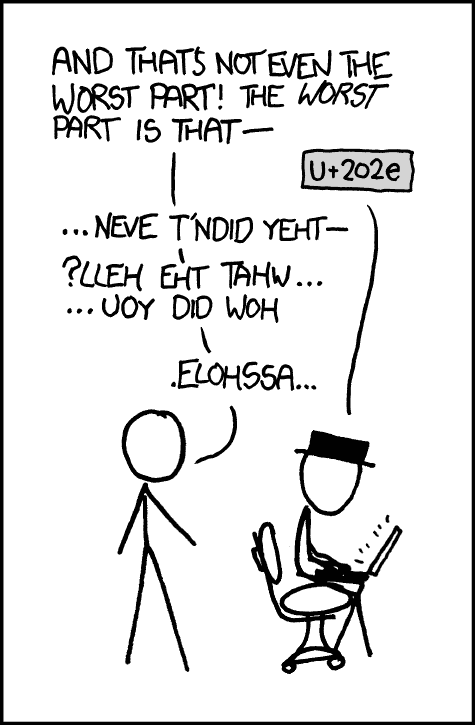

Fig. 18 Shit just got real–Dora the Exploder version.¶

Encodings are where the “rubber hits the road,” so to speak, moving from the theoretical to concrete. In other words, we need to convert the symbols in our catalog into bits in memory, on disk, or traveling over the ‘net. How do we do that? With encodings.

Early on it was assumed that since eight bits wasn’t enough (it wasn’t), we should double that to sixteen. As any self-respecting geek knows, that does a lot more than double the number of slots available, it makes enough room for 65 thousand plus characters! (2¹⁶ = 65,536) To someone in the West that probably sounds like an incredible amount of space for symbols, on the order of perhaps-even-too much? I mean, we’ve been limping along with 256 at a time so far, right gramps?

Well… no. Not long afterwards someone realized that Chinese (actually CJKV) alone has on the order of roughly 100,000 characters! ((boggle)) Though most are obscure, historical, variants, and/or not regularly used. Still, sixteen is not nearly enough when we consider all the other things we’d want in a universal character set.

Definitions

The names for Unicode encodings are lame if you ask me, and not very helpful in describing what they are. Let’s clarify a couple of concepts first.

- Fixed-length encodings:

So far we’ve studied only fixed-length encodings. That means each character is defined with a fixed number of bits, and that never changes, period. e.g.:

Baudot code is 5 bits per character.

ASCII is 7 bits per character.

ISO 8859 is 8 bits per character

This design has some helpful properties:

It’s easy to measure the length of a string.

You can start reading anywhere in the string and get a correct sub-string.

One can slice strings without worry.

The main drawback is that, if we increase the number space by blindly adding more bits then a lot of it will be wasted for simple strings that didn’t need it before. i.e.: Frequently-used text is now taking two bytes of space per character, where it used to take only one.

- Variable-length encodings:

Save space, hooray! Variable is accomplished using only one byte of space with frequently-used text, but two or more bytes when dealing with the less frequently-used. Using a special character designated as an escape code, an additional byte can be added each time we run out of space, as the encoding moves from the essential to obscure.

This design has helpful properties as well:

Frequently-used chars are compact.

Medium-usage chars are medium.

Less frequently-used chars are possible, the sky is the limit.

Great, but variable length encodings have drawbacks as well—losing the helpful features of the fixed-length encoding:

Counting length requires inspecting the whole string.

Slicing the string or reading a sub-string becomes problematic.

There are ways to program around these issues, but they require additional processor time and perhaps memory too.

With those concepts out of the way, let’s look at the types of Unicode encodings.

- UCS (fixed, deprecated):

Confusingly named after the Universal Character Set (when they thought these would be the only encodings), these are the original fixed-length encodings of Unicode in the tradition of ASCII and ISO 8859. They are given a suffix denoting the number of bytes allocated per symbol, e.g.:

UCS-2 (2 bytes, 16 bits, ~65k chars)

UCS-4 (4 bytes, 32 bits [actually fewer at 21], ~1.2M chars)

- UTF (variable):

Designated the “Unicode Transformation Format,” (huh?) these encodings are variable-length and came later. They are given a suffix denoting the number of bits (sigh) allocated per symbol, e.g.:

UTF-8 (1-4 bytes)

UTF-16 (2-4 bytes)

UTF-32 (4 bytes, 32 bits [actually limited to 21])

All three can encode the full ~1.1M character space.

The reason there is less than the full bits of space in the encodings is that the “escape sequences” described earlier (to add additional bytes) prevent the use of several blocks of addresses, each block larger than the last.

Variable-size encodings have come to dominate over time, as the drawbacks in processing speed have been outweighed by storage and transfer concerns, especially as computers have increased in processing power every year. Bottom line—UCSs are out and deprecated, while UTFs are in! Let’s take a look at how that happened.

13.5.2.1. UCS-2 –> UTF-16¶

The “First Stab” at the Problem, aka UCS-2

The first encoding of Unicode, UCS-2, was released in 1991. This is where the misconception started that Unicode was sixteen-bit—only and limited to it. Indeed UCS-2 is limited to it, and only capable of encoding the Basic Multilingual Plane (BMP). It was later deprecated for this reason.

Early implementors of Unicode that implemented UCS-2 included:

Windows NT

Visual Studio, Visual Basic

COM, etc.

Joliet file system for CD-ROM media (an ISO 9660 extension)

Java, The Programming language

JavaScript, The Programming language

Windows, Java, and JavaScript were later upgraded to use…

UTF-16

Is the variable-length version of UCS-2 created for Unicode 2.0 in 1996. It uses 16-bit values by default but can expand to 32 to express the upper planes when needed utilizing what is called, “surrogate pairs”. This makes it the most efficient Unicode encoding of Asian languages such as Chinese, Japanese Kanji, and Korean Hanja (CJKV), as common characters take only two bytes.

Unfortunately, this encoding doubles the size of formerly eight-bit strings that fit in ISO 8859.

Also, the need for UTF-16’s “surrogate pairs,” in characters above the first 16-bits is what limits the Unicode standard to ~1.1 million codepoints.

Endianness and BOM

Due to the fact that each character uses more than one byte, Endianness becomes an issue for both encoders and decoders. This may be specified by prepending either of the characters below as a tip to the decoder:

Byte Order Mark (BOM), U+FEFF

Non-character value, U+FFFE

It may also be stated explicitly as the encoding type: UTF-16BE or UTF-16LE. When using these encodings, do not prepend a BOM.

13.5.2.2. UCS-4 –> UTF-32¶

UCS-4 was never officially released, but was repackaged as UTF-32 and introduced with Unicode 2.0 as well. If you thought UTF-16 was wasteful, you ain’t seen nothin’ yet. UTF-32 is markedly wasteful of space, utilizing a full four bytes of memory for each character, whether needed or not, mostly not (since due to other compatibility rules twenty-one bits are the most it could potentially use anyway).

However, when memory usage is not an issue, it can be useful to use UTF-32 to boost text processing speed. This is due to the fact that, remember back to fixed-length encodings, every character is the same size. They are directly addressable so can be accessed efficiently (in constant time) as you would an array. The presence of combining characters can reduce these advantages, however. Endianness is a factor here as well.

Analysis

We’ve got choices of UTF-16 and UTF-32 now from Unicode 2.0, but they’re still not so compelling are they? As this amusing take by Joel on Software illustrates:

For a while it seemed like that might be good enough, but programmers were complaining. “Look at all those zeros!” they said, since they were Americans and they were looking at English text which rarely used code points above U+00FF. Also they were liberal hippies in California who wanted to conserve (sneer). If they were Texans they wouldn’t have minded guzzling twice the number of bytes. But those Californian wimps couldn’t bear the idea of doubling the amount of storage it took for strings.

There must be a better way, hah. Moving on.

13.5.2.3. UTF-8 FTW, Baby!¶

UTF-8 was designed, in front of my eyes, on a placemat in a New Jersey diner one night in September or so, 1992.

— Rob Pike

Fortunately some smart people were thinking the same thing. Finally the problem landed in the lap of Ken Thompson , who designed it with Rob Pike “cheering him on.” Yes, that Ken and Rob, creators of Unix:

What happened was this. We had used the original UTF (16) from ISO 10646 to make Plan 9 support 16-bit characters, but we hated it. We were close to shipping the system when, late one afternoon, I received a call from some folks, I think at IBM, who were in an X/Open committee meeting. They wanted Ken and me to vet their file-system safe UTF design. We understood why they were introducing a new design, and Ken and I suddenly realized there was an opportunity to use our experience to design a really good standard and get the X/Open guys to push it out. We suggested this and the deal was, if we could do it fast, OK. So we went to dinner, Ken figured out the bit-packing, and when we came back to the lab after dinner we called the X/Open guys and explained our scheme. We mailed them an outline of our spec, and they replied saying that it was better than theirs, and how fast could we implement it?

The design turned out to have a number of very useful properties.

Backward Compatibility

UTF-8 is a variable-length encoding that can store a character using one to four bytes (shortened from six when Unicode was limited to twenty-one bytes in 2003). It is designed for backward compatibility with ASCII, as every code point from 0-127 is stored in a single byte. Additionally, it’s compatible with program code that use a single null character (of 0) to terminate strings.

¡Every ASCII file is also valid UTF-8! ¡Arriba! !Arriba! 😍

How does it work? Like this, the underscore “ˍ” character below representing a container for a significant bit value:

Avail. Bits |

First |

Last |

Byte 1 |

Byte 2 |

Byte 3 |

Byte 4 |

7 |

U+0000 |

U+007F |

0ˍˍˍˍˍˍˍ |

|||

11 |

U+0080 |

U+07FF |

110ˍˍˍˍˍ |

10ˍˍˍˍˍˍ |

||

16 |

U+0800 |

U+FFFF |

1110ˍˍˍˍ |

10ˍˍˍˍˍˍ |

10ˍˍˍˍˍˍ |

|

21 |

U+10000 |

U+10FFFF |

11110ˍˍˍ |

10ˍˍˍˍˍˍ |

10ˍˍˍˍˍˍ |

10ˍˍˍˍˍˍ |

As Wikipedia describes well:

The first 128 characters (US-ASCII) need only one byte. The next 1,920 characters need two bytes to encode, which covers the remainder of almost all Latin-script alphabets, and also Greek, Cyrillic, Coptic, Armenian, Hebrew, Arabic, Syriac, as well as Combining Diacritical Marks. Three bytes are needed for characters in the rest of the Basic Multilingual Plane, which contains virtually all characters in common use[11] including most Chinese, Japanese and Korean characters. Four bytes are needed for characters in the other planes of Unicode, which include less common CJKV characters, various historic scripts, mathematical symbols, and emoji (pictographic symbols).

Self-Synchronization

The length of the multi-byte UTF-8 sequences is easily determined just by looking at their prefix codes:

Initial byte prefix, one of:

0ˍˍˍˍˍˍˍ11ˍˍˍˍˍˍ

Continuation byte prefix:

10ˍˍˍˍˍˍ

Note in the table above, that the number of ones at the start of the first byte gives the number of bytes of the whole character (when not 0). That’s a great property when looking at the encoded text in a hex editor, or when writing decoders or other bit-twiddling programs.

Due to the fact that continuation bytes do not share the same prefix with the initial byte of a character sequence means that a search will not find a character inside another. This property makes slicing and dicing UTF-8 strings much less worrisome.

Last, but not least UTF-8 is not byte-order dependent due to the fact it is byte oriented.

Auto-detection and Fallback

In addition to complete ASCII support, there is also partial ISO 8859/Windows-1252 support as well. When given “extended” text by mistake, the UTF-8 decoder can recognize and fix it in many cases, due to the fact that most higher character values are impossible for UTF-8. These values can therefore be transformed to the Latin-1 Supplement Block block.

Comparison

UTF-8 |

UTF-16 |

UTF-32 |

|

Highest code point |

10FFFF |

10FFFF |

10FFFF |

Code unit size |

8 bit |

16 bit |

32 bit |

Byte order dependent |

no |

yes |

yes |

Fewest bytes per character |

1 |

2 |

4 |

Most bytes per character |

4 |

4 |

4 |

13.5.3. Complexity and Foot-Dragging¶

Unicode is a fascinating mess.

— Armin Ronacher

Using Unicode day to day is not hard on modern systems, but when one digs in a bit things get a little scarier, which tends to turn off the “graybeards who grew up on ASCII.” Unicode has:

Planes and Blocks

Full characters, and “combining characters”

e.g., É or (E and ´) in combination.

Normalization , or conversion to a standard representation. For example compare the character “n” and a combining tilde “~”, with the composed version: “ñ”.

Bi-directional text display.

Oh, up and down too!

Collation rules

Regular expression rules

Historical scripts, dead languages

Phoenician and Aramaic anyone? Cuneiform, Runes?

Duplicate code points with different semantics. e.g.:

Roman Letter V vs. the Roman Numeral Ⅴ, which is used as a number.

Greek letter Omega Ω, and the symbol for electrical resistance in Ohms, Ω.

Font availability across operating systems.

Encoding issues:

Endianness , or the order of the bytes in computer memory.

Language Issues

The real craziness with Unicode isn't in the sheer number of characters that have been assigned. The real fun starts when you look at how all these characters interact with one another.

— Ben Frederickson, Unicode is Kind of Insane

A tiny slice of what the Unicode designers had to deal with, questions and trivia to ponder:

Greek has two forms of the lower-case sigma: “ς” used only as the final letter of a word, and the more familiar “σ” used everywhere else. Is that one letter, or two?

Is the German Eszett “ß” a separate letter or a decorative way of writing “ss?” Germans themselves have disagreed over the years.

Does a diaeresis/umlaut mark over a letter e.g.: ö, make it a different letter? German says yes, English/Spanish/Portuguese say no.

WorldMaker on HN:

Language is messy. At some point you have to start getting into the raw philosophy of language and it’s not just a technical problem at that point but a political problem and an emotional problem.

Which language is “right”? The one that thinks that diaresis is merely a modifier or the one that thinks of an accented letter as a different letter from the unmodified? There isn’t a right and wrong here, there’s just different perspectives, different philosophies, huge histories of language evolution and divergence, and lots of people reusing similar looking concepts for vastly different needs.

JoelSky chimes in:

If a letter’s shape changes at the end of the word, is that a different letter? Hebrew says yes, Arabic says no.

Anyway, the smart people at the Unicode consortium have been figuring this out for the last decade or so, accompanied by a great deal of highly political debate, and you don’t have to worry about it. They’ve figured it all out already.

Lazy Transition

Back in my day, we didn't even have uppercase, and we liked it!

— Gramps on Computing (in the voice of Wilford "Dia-BEET-uss" Brimley)

You have to understand (as a newbie) what it was like around the turn of the

thentury, cough, get my teeth!

Most of the hand-wringing over Unicode back then had to do

with the fact that the existing docs with eight-bit code-pages were a mess

(who would convert them?),

Unicode was still immature and transition incomplete,

UTF-8 wasn’t invented/well-publicized yet,

16-bit encodings equaled wasted space,

and lots of legacy systems were still around that couldn’t handle it.

I think it's also worth remembering the combination of arrogance and laziness which was not uncommon in the field, especially in the 90s.

— acdha on HN

Meanwhile Americans, who by cosmic luck had one of the simplest alphabets on the planet, and coincidentally happened to own the big computing, IT, and operating systems companies thought, “what’s the problem? Things look fine from here! Hell, ASCII was good enough for Gramps, why not me?” Well yeah, not very forward thinking, but the issues listed previously were real as well.

What’s the Verdict?

An interesting judgement from etrnloptimist at reddit :

The question isn’t whether Unicode is complicated or not. Unicode is complicated because languages are complicated.

The real question is whether it is more complicated than it needs to be. I would say that it is not.

Agreed, though if we were to start over, yes, a few things might be simplified. But, let me ask you how many times a complex project landed a perfectly designed 1.0?

13.5.4. The Growth of UTF-8¶

Somewhere around 2010 perhaps 2012, not sure because it happened so gradually this author wasn’t paying attention. But, after:

UTF-8 was invented, solving:

Storage concerns

Backwards compatibility

Self-synchronization

Out with the old Windows, in with the new, w/ better support

The Web took it and ran! Many old docs were updated.

iPhone was released, w/ better support

Emoji became a sought after feature, first in Asia, then the world.

Suddenly everything was Unicode! No more hurdles to cross and very little for user or developer to do, it’s just handled by the operating system except in obscure circumstances. Most if not all of the issues preventing Unicode success had been solved. Just use UTF-8 everywhere became a rallying cry! That lead to the explosive growth seen in the chart below.

13.5.5. Now What!? AKA, Actually Working With It¶

🙈 🙉 🙊

One thing that’s really bugged me in all the Unicode docs, tutorials, and blog posts I’ve read over the years is that they teach you all the facts about it (like this chapter so far) and then walk away.

Okay ((brain filled with tons of facts about Unicode)), so now I’m sitting in front of my editor and its time to start taking advantage of Unicode! What now? When do I encode? When do I decode? What about the encode and decode exceptions that pop up in various languages?

Somehow that part never got explained. 😠 The writers were just too tired to write part two, I guess. 🤷

So, even armed with copious Unicode knowledge, as a developer you’d still find yourself patching programs here and there in a piecemeal fashion, catching errors and encoding/decoding data with no rhyme or reason. You’d never be sure you handled every case, and sometimes a fix in one part of program would break another in another part. (Part of the problem was programming languages ill-equiped for the Unicode transition, but I digress.) Sheesh.

Cue THX sound effects - SCHWIIIIIIiiinnnggg…

Kindly hit the play button to the right –>

now, wait a sec, then keep reading…

I’ll let you in on the secret. Years, and I mean years later as in close to a decade of fumbling around in the dark, the answer was finally stumbled upon—by me anyway Grrrrr…

Finally, a mental model for What. To. Do.

to solve these issues once and for all.

What was it, you ask… ??

“The Unicode Sandwich”

Top Bun - Read encoded bytes from input (file, network) and decode them into Unicode.

Meat - Process in memory as Unicode text.

Bottom Bun - Encode to bytes on output as close to the edge as possible then:

Save to disk

Write to network

That’s it for the sandwich ((burp)). Achievement unlocked, enlightenment followed, whew.

String Indexing

Unfortunately, as long as you believe that you can index into a Unicode string, your code is going to break. The only question is how soon.

— ekidd on HN

Next up are the problems with finding lengths and offsets in strings—otherwise known as indexing—that may not be composed of full but also combining characters. It’s important to remember with Unicode that:

One code point != one character

Code points are not the same as characters/graphmemes/glyphs

As ekidd mentions above, this may work fine until someone slips in an obscure character. There is not a full solution to the problem unfortunately. Strings may need to be normalized first and/or inspected programmatically.

Regular expressions are another area where Unicode complexities should be anticipated and dealt with.

13.5.6. Fonts and Errors¶

☙

To conclude this section, did we happen to mention you’ll need comprehensive space-consuming fonts to see all these amazing characters? Don’t despair, operating system vendors have been improving Unicode support every year. While they’ve been good at providing fonts for various languages, there still seem to be symbols missing here and there. Perhaps, you’ve got a copy of an older OS that can’t be updated.

For those reasons, you may find the Symbola font quite useful. It’s a symbol font that contains a fully up-to-date set of the upper blocks and planes implemented, from math to music to food to emoji! The latest version (up to Unicode v13) can be found here. Fonts for “ancient scripts” are found there as well. Enjoy.

It’s also worth mentioning that modern OSs tend to replace the emoji block with full-colored icons. This is somewhat of a mixed blessing, but a common practice now, so you’ve been warned.

Hint: Mo-ji-ba-ke

文 字

化 け

What is this Mojibake? Not the cute little ice-cream balls, not pronounced “bake” like in an oven, but “bah-keh,” and means “character transform” in Japanese.

Simply put,

it is jumbled text that has been decoded using the wrong

character encoding for display to the user,

and may look similar to this:

æ–‡å—化ã‘

While you’ll often see boxes, question marks, and hexadecimal numbers, (similar to the lack of font support mentioned above) additional corruption may occur. This may result in a loss of sync over where one character starts and ends, display in an incorrect writing system, or worse.

¡d∩ ƃuᴉddɐɹM

Godspeed, Unicode. We await your frowning pile of poo with open arms.

“There ain’t no such thing as plain text.”

“Always declare an encoding when creating or handling textual data.” (Professionals do.)

The history of telecommunications is interesting and a precursor to computing and networking.

7-bit ASCII is a fundamental industry standard and backward compatibility a bridge to earlier times.

The “Control” key on your keyboard maps keys to ASCII control values by clearing the top two bits of the seven from the corresponding letter.

The Delete key once was called Rubout because it punched all the holes in paper tape or punch card, directing the reader to ignore it.

There were hundreds of 8-bit ASCII extensions now considered a “dead-end.” Two of the most famous, that live on were ISO 8859 and Windows-1252.

Unicode is an ambitious attempt to catalog all the symbols used by humans, currently and throughout history into a single reference, called the Universal Character Set. (Resulting in:)

“Unicode is a fascinating mess.”

Unicode is not limited to 16-bit encodings, which are insufficient to solve the full problem.

UTF-16 is the most compact encoding for modern texts in “CJKV” (Asian) languages.

UTF-8 is the best all around encoding for other uses, helping propel it to incredible popularity. Use it!

When programming, use the “Unicode Sammich” model to remember how to handle textual data properly.

The Symbola font is highly useful when an OS vendor has let you down.

See also: Resources

about the Unicode Consortium

How Python does Unicode James Bennett (good piece on an internal implementation of Unicode)

UTF-8 as Internal String Encoding, Armin Ronacher

Absolute minimum… (Oldie but Goodie) Spolsky’s now somewhat-obsolete rant about developer/industry foot-dragging. We’re now over that hump.

Security:

The Oral History Of The Poop Emoji (Or, How Google Brought Poop To America)

Search: