4. Construction

BUILD MORE FARMS!!

— Warcraft III: Reign of Chaos

The construction phase of the life cycle is the central activity of a software project, where software is built. During construction, the applications and libraries needed to complete the project are written according to the requirements and designs created in the previous phases. Not surprisingly, this phase is not skimped on or glossed over like the others, as it’s normally not possible to do so and still get paid.

Fig. 4.1 How the programmer wrote it.

The phases will begin to overlap at this point, necessitating a multidisciplinary approach. While building the software, we’ll need to revisit requirements found to be impractical and assumptions found to be incorrect. Functionality that was too minor to fully design and document up front will need to be fleshed out. Code will need to be tested, fixed, and retested until quality objectives are reached. Project resources need to be managed and results communicated. Meanwhile, engineers apply their computer-science chops to various challenges, aiming to tackle them as efficiently as possible.

A number of techniques are described below that have been found to make development more productive, and the resulting software more reliable and maintainable.

See also: Books

Code Complete: A Practical Handbook of Software Construction, 2nd Edition, by Steve McConnell

The “bible” of software construction practices and techniques, with additional discussion of other life-cycle phases. If you are serious about software development you’ll want a copy. It’s arguably the best choice if you’ve only time for a single book—the best “bang for the buck” of the recommended group.

4.1. Considerations

Construction is an activity in which the software engineer has to deal with sometimes chaotic and changing real-world constraints, and he or she must do so precisely.

— IEEE SWEBOK Guide V3.0, Ch. 3

In the previous chapter on Design, we discussed the use of the Right Tools for the Job: fundamental construction building blocks such as operating systems and programming languages. These choices are also a construction concern.

We’ll also consider the “whole system” a project may define. A software system is defined by Wikipedia as “a system of intercommunicating components based on … a number of separate programs, configuration files, … and documentation”.

4.1.1. Minimization of Complexity

After one has played a vast quantity of notes and more notes, it is simplicity that emerges as the crowning reward of art.

— Frédéric Chopin

Nocturne by Chopin ♪

Humans are able to keep only a few things in their heads at once (small skulls, remember?). Every surprise, hack, unfixed bug, or exception to a rule in your project is an item that needs to be remembered. While each is insignificant when there are only a few, confusion explodes as their number grows.

Compounding Complexity

If these “loose ends” are not actively hunted down and tied up early, they’ll compound, with interest. Work slows to a crawl, and the project may spin out of control while developers comb through the codebase dealing with bugs in three different layers simultaneously (see Fig. 4.2).

4.1.1.1. Essential vs. Incidental

Simplicity is achieved in two general ways: minimizing the amount of essential complexity that anyone's brain has to deal with at any one time, and keeping accidental (incidental) complexity from proliferating needlessly.

— Steve McConnell, Code Complete (Ch. 5)

In the famous “No Silver Bullet” essay discussed previously, Fred Brooks made a distinction between essential complexity and accidental complexity (the latter clarified later as incidental). Essential complexity is the complexity of the problem space you’re working in, e.g., its functional requirements and algorithms. Consider a high-frequency algorithmic trading platform for financial markets: the complexity of the essential layer of such a problem may only be reduced through research and deep contemplation.

Fig. 4.3 Ten minute+ program load times. :-/

Incidental complexity, on the other hand, is a consequence of the crudeness of our development tools and platforms. The best they can do is “get out of the way” and not slow down work on the essential, or core, problems. Not too long ago, programming was done on punched cards and later with line editors (one line at a time on screen). As you can imagine, it took a very long time to write a complex application that way, and a large portion of that time had nothing to do with the core problem itself. Today we’ve got dramatically faster hardware, more productive languages, syntax highlighting, code completion, “4k” monitors, and distributed version control. Yet complex projects are still hard, if not harder, as reductions in incidental complexity allow us to aim a bit higher.

While incidental complexity is reduced with better tools, it is often increased through poor design choices. In the news recently was the piece, “Medical Equipment Crashes During Heart Procedure Because of Antivirus Scan.” The problems that occurred are not inherent to medical devices, but rather a poor design and implementation of the solution.

4.1.1.2. Readability

Any fool can write code that a computer can understand. Good programmers write code that humans can understand.

— Martin Fowler

A principle also known by the phrase “write programs for people first, computers second.” When programming, always opt for simple and readable techniques over clever ones. Not only will this help others understand the code quickly, you’ll be helping yourself as well; the reason will become clear months from now, when you revisit the code without the benefit of context. Be courteous to yourself and others: write readable code. The basics follow:

Courtesy Khan Academy.

Modules, variables, functions, classes, methods, etc. should have:

Appropriate, consistent, and concise names

Focus, single purpose

Consistent code formatting

Limited file, function, and line length

Limited nesting

Concise documentation and comments
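As a small illustration (a hypothetical example, not taken from the sources cited here), the two Python functions below compute the same result for numeric scores. The first packs everything into one clever line; the second applies the basics above: a descriptive name, a docstring, one step per line, and a named parameter instead of a magic number.

```python
# Clever: correct, but the reader has to decode it.
def f(d): return dict(sorted(d.items(), key=lambda x: -x[1])[:3])


# Readable: same result, with a descriptive name, a docstring, and one idea per line.
def top_scores(scores_by_player, count=3):
    """Return the `count` highest-scoring players as a dict, best first."""
    ranked = sorted(scores_by_player.items(), key=lambda item: item[1], reverse=True)
    return dict(ranked[:count])


if __name__ == "__main__":
    scores = {"ann": 5, "bo": 9, "cy": 7, "di": 1}
    print(top_scores(scores))               # {'bo': 9, 'cy': 7, 'ann': 5}
    print(f(scores) == top_scores(scores))  # True
```

Both versions run equally fast; the difference is only in how long a future reader needs to understand them.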

While the book Code Complete is the comprehensive resource in this area, many tips can also be found on Stack Overflow. Code written to be readable may also be said to be self-documenting.

Simpler code has fewer bugs. Simpler code is more readable. Simpler programs are easier to understand, easier to use. This is important in how you design your programs or APIs as well as how you write them.

Use boring technology. Write boring code.

— Jan Schaumann

Unfortunately, some fraction of developers will balk at putting effort into readability: those who derive self-worth from their cleverness. You may have encountered this type of geek (often found in their natural habitat at Slashdot), who eschews simplicity and argues that usability should not be improved, lest the (L)users get too comfortable and lazy. Best to let them go if success is a goal.

Tip: Reading vs. Writing

Programs must be written for people to read, and only incidentally for machines to execute.

— Abelson/Sussman, "Structure and Interpretation of Computer Programs"

While code is written and rewritten a few times over its lifetime, it will be read dozens, if not hundreds, of times. Make an extra effort at clarity to help future readers.

See also: Online Resources

Specific advice on readability can be found in the classic The Elements of Programming Style by Brian W. Kernighan and P. J. Plauger, as well as in the style guides listed under Coding Standards below.

4.1.1.3. Do One Thing, Once

A class should have only one reason to change.

— Uncle Bob

When one unit of code (a module, class, or function) is associated with multiple tasks, it can be difficult to reason about and test. It also becomes more likely that work to update or fix one task will adversely affect the others.

This single responsibility principle promotes a separation of concerns and helps to reduce coupling, or how closely connected two units are.
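A minimal sketch of the single responsibility principle in Python, using a hypothetical sales report: instead of one function that parses the input, totals it, and formats the output, each task gets its own function. A change to the report layout then cannot break parsing, and each piece can be tested on its own.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class Sale:
    region: str
    amount: float


def parse_sales(csv_text):
    """Parsing only: turn CSV text with 'region' and 'amount' columns into Sale objects."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [Sale(row["region"], float(row["amount"])) for row in reader]


def total_by_region(sales):
    """Calculation only: sum sale amounts per region."""
    totals = {}
    for sale in sales:
        totals[sale.region] = totals.get(sale.region, 0.0) + sale.amount
    return totals


def format_report(totals):
    """Presentation only: one 'region: amount' line per region, sorted by name."""
    return "\n".join(f"{region}: {amount:.2f}" for region, amount in sorted(totals.items()))


if __name__ == "__main__":
    data = "region,amount\nwest,10\neast,5\nwest,2.5\n"
    print(format_report(total_by_region(parse_sales(data))))
```

Each function has exactly one reason to change: the input format, the business rule, or the output layout.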

DRY: Don’t Repeat Yourself

Every piece of knowledge must have a single, unambiguous, authoritative representation within a system.

— Andrew Hunt, David Thomas, The Pragmatic Programmer

Conversely, when one task is duplicated across multiple units and changes occur, it’s quite likely that some of the copies will be overlooked and fall “out of sync.” Avoiding this is known as “Don’t Repeat Yourself,” or the D.R.Y. principle, which is “aimed at reducing repetition of all kinds.” Both situations lead to easily preventable bugs. Therefore, each unit should do one thing, and one thing only, once.
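A minimal D.R.Y. sketch, assuming a hypothetical password policy: the minimum length appears exactly once, and both the validation rule and the help text derive from it. Had the number 12 been typed into each function separately, a policy change could update one and silently miss the other.

```python
# One authoritative definition of the (hypothetical) password policy.
# Every rule and message that depends on it reads this constant,
# so changing the policy is a one-line edit that cannot fall "out of sync."
MIN_PASSWORD_LENGTH = 12


def is_valid_password(password: str) -> bool:
    return len(password) >= MIN_PASSWORD_LENGTH


def password_help_text() -> str:
    return f"Use at least {MIN_PASSWORD_LENGTH} characters."


if __name__ == "__main__":
    print(is_valid_password("correct horse"))  # True (13 characters)
    print(password_help_text())                # Use at least 12 characters.
```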

4.1.1.4. Least Surprise

Make a user interface as consistent and as predictable as possible.

— Jon Bentley, Programming Pearls

Also called “least astonishment,” the principle recommends doing the least surprising thing, from software design to user interfaces. Designs should work consistently with platform idioms and with how typical users expect them to work. From “Organize by the principle of least surprise,” courtesy Eric Scrivner:

Ask yourself, “How would I organize this so that someone using Notepad with a good grasp on the programming language would be able to find and edit any arbitrary component?” This is ultimately how your codebase will seem to every new person who encounters it. For example, if you are asked to modify the function that geocodes a location and you have no experience with a code base, it’s reasonable that you’d look in app.geolocation.utils as a first approximation. You’d be surprised if instead it were somewhere like app.auth.models. The first example follows the principle of least surprise. Reduce the mental strain on yourself and others by sensibly organizing components into well-named modules.
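At the level of a single class, “working consistently with platform idioms” might look like the hypothetical Python class below. Instead of inventing method names such as get_count() or has_song() that every user must look up, it implements the standard protocols so that len(), for loops, and `in` simply work, and its sorting method behaves like the built-in sorted() by returning a new list rather than quietly reordering the playlist.

```python
class Playlist:
    """A hypothetical container that follows Python's own idioms.

    Users can call len(), loop with for, and test membership with `in`,
    instead of having to discover custom names like get_count() or has_song().
    """

    def __init__(self, titles=()):
        self._titles = list(titles)

    def __len__(self):
        return len(self._titles)

    def __iter__(self):
        return iter(self._titles)

    def __contains__(self, title):
        return title in self._titles

    def sorted_titles(self):
        # Like the built-in sorted(): returns a new list and leaves the playlist unchanged.
        return sorted(self._titles)


if __name__ == "__main__":
    playlist = Playlist(["Nocturne", "Ballade", "Etude"])
    print(len(playlist))             # 3
    print("Etude" in playlist)       # True
    print(playlist.sorted_titles())  # ['Ballade', 'Etude', 'Nocturne']
    print(list(playlist))            # ['Nocturne', 'Ballade', 'Etude']
```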

See also:

Software Complexity Is Killing Us, by Justin Etheredge

Convention over configuration aims to reduce the number of decisions that a developer is required to make.

4.1.2. Reuse

It’s harder to read code than to write it.

This is why code reuse is so hard.

— Joel on Software, "Things You Should Never Do, Part I"

Software reuse is another essential strategy to reduce complexity and conserve resources. It’s almost never a good idea to rewrite common functionality that has already been written, tested, and debugged, as it’s unlikely you’ll have the endurance to handle all of its significant edge cases. The corollary to this strategy is that developers should be working on the parts of the project unique to it.

There are two facets of reuse: reusing others’ code, and designing your code to be reused by others. Make sure to do both. One exception is when the component is fundamental to your business: don’t outsource a core function, common or not.
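A small Python illustration of the edge-case argument: a hand-rolled comma split looks fine until a field contains a quoted comma, while the standard library’s csv module, already written, tested, and debugged, handles it. The sample row is made up for the example.

```python
import csv
import io


def naive_split(line):
    # Hand-rolled parser: breaks on commas inside quoted fields.
    return line.rstrip("\n").split(",")


def parse_with_csv(line):
    # Reuses the standard library's CSV parser, which understands quoting.
    return next(csv.reader(io.StringIO(line)))


if __name__ == "__main__":
    row = '"Acme, Inc.",Portland,42\n'
    print(naive_split(row))     # ['"Acme', ' Inc."', 'Portland', '42']
    print(parse_with_csv(row))  # ['Acme, Inc.', 'Portland', '42']
```

Quoting is only one of the cases the csv module covers; escaped quotes and embedded newlines are others a quick rewrite would likely miss.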

4.1.3. Project Size

Extrapolation of times for the hundred-yard dash shows that a man can run a mile in under three minutes. (100m/1600m)

— Frederick P. Brooks, Jr., The Mythical Man-Month (Ch. 8)

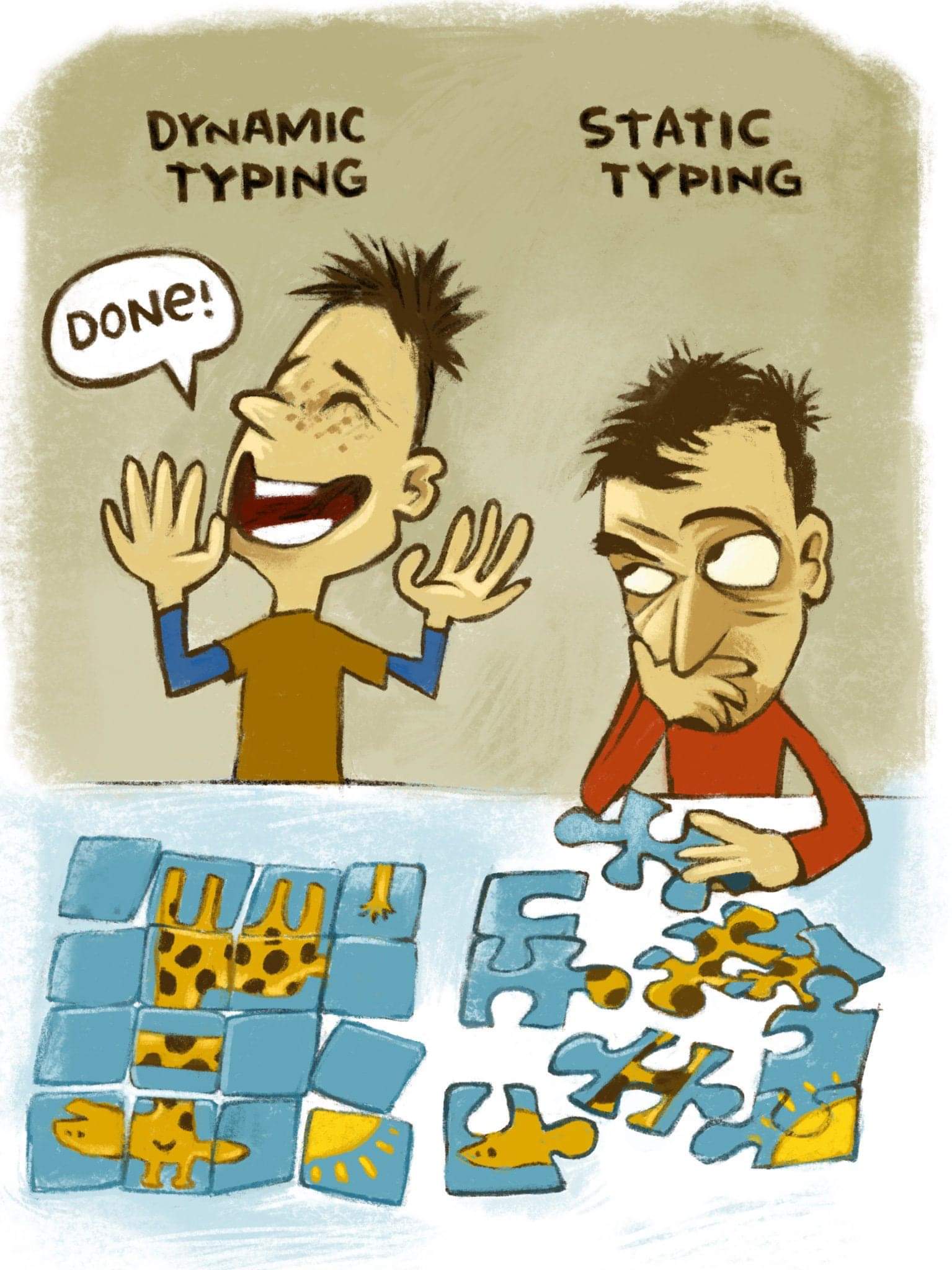

Smaller projects and systems are often straightforward for individuals to understand and reason about. It often makes sense to use a rapid-development language with a minimum of overhead and drudgery involved. Typically this allows the developer to skip type-definition, low-level programming tasks such as manual memory allocation, and compilation steps. Code is often structured using a procedural paradigm for sake of simplicity. These choices maximize developer productivity early on, but start to “show cracks” of strain if/when/as the project grows.

Large systems, on the other hand, are difficult for a single person to keep in mind at once. Consequently, as projects scale in size and complexity, the number of defects per line of code rises (Capers Jones, “Program Quality and Programmer Productivity”, 1977, 1998).

All other things being equal, productivity will be lower on a large project than on a small one, [and] a large project will have more errors per thousand lines of code than a small one.

— Steve McConnell, Code Complete (Ch. 27)

Team size and skill level are important factors as well. As a team grows in number, members will differ in both experience and familiarity with the project; people will come and go, and not all of them will be superstars. 😁 Correspondingly, scalable development practices become increasingly helpful as the project progresses, lowering the learning curve through documentation and “onboarding,” and preventing classes of trivial errors, e.g. passing incorrect arguments to methods.

Such techniques, avoided as overhead on smaller projects, shift from burden to recommendation, and then to requirement, as the project scales. What techniques exactly, you may be asking?

Note: Scalable Development Practices

Fig. 4.6 An excellent illustration, author as yet unknown.

Use of statically typed languages, or the addition of optional typing. These reduce programming errors by finding them earlier (at compile time rather than run time), at the cost of additional reading and writing time.

Use of Object-oriented and functional paradigms to further enforce bondage and discipline. Umm, err integrity.

Use of static-analysis and linting tools to warn about problematic code early.

Initiation and expansion of developer testing activities and formal QA activities.

Separation of functionality into tiers and distributed designs.
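A minimal sketch of optional typing in Python, the first practice above: the annotations are ignored at run time, but they let a separate checker (mypy is one common choice) catch a wrong argument type before the code runs. The function is a hypothetical example.

```python
from __future__ import annotations


def average(values: list[float]) -> float:
    """Return the arithmetic mean of a non-empty list of numbers."""
    if not values:
        raise ValueError("average() needs at least one value")
    return sum(values) / len(values)


if __name__ == "__main__":
    print(average([2.0, 4.0, 9.0]))  # 5.0

    # A static type checker such as mypy would reject the next line before the
    # program ever runs, because a str is not a list[float]. Without a checker,
    # Python only discovers the mistake (as a TypeError inside sum) when the line executes.
    # average("249")
```

On a small script the annotations are mostly overhead; on a large codebase with many contributors they turn a class of runtime surprises into check-time warnings.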

Although these practices target project scalability rather than performance, some practices may improve performance as well.

Tradeoffs

These additional rules tend to limit your top performers while boosting low performers. The relationship is illustrated at right. Simple development techniques (in red) tend to have a linear complexity cost as a project scales in size. They are cheaper at the beginning, and therefore easier to get started with. Scalable techniques (in blue) add overhead early in a project but scale better (closer to logarithmic growth) as it grows.

Note the crossover point near the center of the graph. Estimating which side of it your project will land on should heavily inform decisions about project design and construction strategy. Keep in mind that successful projects expand over time.

4.1.4. On Performance

We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil.

— Donald Knuth

Most programs spend the majority of their time in a tiny, repetitive portion of the code base: as Knuth and others have found, approximately three percent of the total. To find out where that portion is, you’ll need to measure execution time with a profiling tool.
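For example, Python ships with the cProfile and pstats modules. The sketch below profiles a hypothetical workload in which one deliberately quadratic function dominates; sorting the report by cumulative time puts it near the top, which is exactly the measurement that should precede any optimization.

```python
import cProfile
import io
import pstats


def slow_part(n):
    # Deliberately quadratic: a nested loop over n * n pairs.
    total = 0
    for i in range(n):
        for j in range(n):
            total += i * j
    return total


def fast_part(n):
    return sum(range(n))


def workload(n):
    return slow_part(n) + fast_part(n)


def profile_call(func, *args):
    """Run func(*args) under cProfile; return its result and a text report."""
    profiler = cProfile.Profile()
    profiler.enable()
    result = func(*args)
    profiler.disable()
    stream = io.StringIO()
    stats = pstats.Stats(profiler, stream=stream)
    stats.sort_stats(pstats.SortKey.CUMULATIVE).print_stats(10)  # top 10 entries
    return result, stream.getvalue()


if __name__ == "__main__":
    _, report = profile_call(workload, 300)
    print(report)
```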

Premature Optimization

You can't tell where a program is going to spend its time. Bottlenecks occur in surprising places, so don't try to second guess and put in a speed hack until you've proven that's where the bottleneck is.

— Rob Pike

So, if you find yourself combing through the code looking for “things to speed up,” there’s a 97% chance you’re wasting time. The code you’re speeding up now may even be thrown away later. The process often hurts readability as well: most code optimization techniques are hard to grok at first glance, and may need extensive comments to explain. There may be a better way: rethinking the problem. From Code Complete (Ch. 25), Steve McConnell:

Performance is only one aspect of overall software quality, and it’s usually not the most important. Finely tuned code is only one aspect of overall performance, and it’s usually not the most significant. Program architecture, detailed design, and data-structure and algorithm selection usually have more influence on a program’s execution speed and size than the efficiency of its code does.

Optimization

Measure. Don't tune for speed until you've measured, and even then don't unless one part of the code overwhelms the rest.

— Rob Pike

Once you’ve found the hot spots with a profiler, the keys to optimizing are the following:

Do less

Find a better algorithm for the problem (remember Big O?).

Learn more efficient language idioms.

Cache expensive results

Lastly, port unit(s) to a faster/lower-level language such as C
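Two of these keys, sketched in Python with hypothetical functions: a better algorithm (replacing a pairwise comparison with a set, which takes the work from O(n²) to roughly O(n) on average), and caching expensive results with the standard library’s functools.lru_cache. As always, measure before and after; the point is the technique, not these particular functions.

```python
from functools import lru_cache


def has_duplicates_slow(items):
    # O(n^2): compares every pair of items.
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_fast(items):
    # O(n) on average: set membership checks take constant time on average.
    # Requires hashable items.
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


@lru_cache(maxsize=None)
def fib(n):
    # Without the cache this recursion is exponential; with it, each n is computed once.
    if n < 2:
        return n
    return fib(n - 1) + fib(n - 2)


if __name__ == "__main__":
    data = list(range(2000)) + [1999]
    print(has_duplicates_slow(data), has_duplicates_fast(data))  # True True
    print(fib(80))           # returns almost instantly thanks to the cache
    print(fib.cache_info())  # hit/miss counts show the cache at work
```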

From High Performance Python (Gorelick/Osvald):

Sometimes, it’s good to be lazy. By profiling first, you can quickly identify the bottlenecks that need to be solved, and then you can solve just enough of these to achieve the performance you need. If you avoid profiling and jump to optimization, then it is quite likely that you’ll do more work in the long run. Always be driven by the results of profiling.

Tip

Make it work, make it right, then make it fast.

— Proverb

Shlemiel the Painter

What about when performance is clearly unacceptable? Perhaps a “Shlemiel the Painter’s” algorithm (courtesy JoS) has been implemented:

Shlemiel gets a job as a street painter, painting the dotted lines down the middle of the road. On the first day he takes a can of paint out to the road and finishes 300 yards of the road. “That’s pretty good!” says his boss, “you’re a fast worker!” and pays him a kopeck.

The next day Shlemiel only gets 150 yards done. “Well, that’s not nearly as good as yesterday, but you’re still a fast worker. 150 yards is respectable,” and pays him a kopeck.

The next day Shlemiel paints 30 yards of the road. “Only 30!” shouts his boss. “That’s unacceptable! On the first day you did ten times that much work! What’s going on?”

“I can’t help it,” says Shlemiel. “Every day I get farther and farther away from the paint can!”

Bring the damn paint can. In other words, for best performance, avoid unnecessary work whenever possible.
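A Python sketch of the difference, loosely modeled on the C strcat case Joel Spolsky describes: the first function walks from the start of the buffer to find its end before every append, so its work grows quadratically with the number of pieces; the second brings the paint can along by letting str.join build the result in linear time. Both function names are made up for the illustration, and the step counter exists only to make the wasted walking visible.

```python
def shlemiel_join(pieces):
    """Append each piece after re-scanning the buffer from the start to find its end.

    Returns the joined string and the number of steps spent walking back to the end.
    """
    buffer = []
    steps = 0
    for piece in pieces:
        end = 0
        while end < len(buffer):  # the long walk back to the paint can
            end += 1
            steps += 1
        buffer[end:end] = piece  # insert the piece's characters at the end
    return "".join(buffer), steps


def paint_can_join(pieces):
    """Bring the paint can: str.join builds the result in linear time."""
    return "".join(pieces)


if __name__ == "__main__":
    pieces = ["-"] * 1000
    joined, steps = shlemiel_join(pieces)
    print(joined == paint_can_join(pieces))  # True
    print(steps)  # 499500, i.e. 1000 * 999 / 2
```

Doubling the number of pieces roughly quadruples Shlemiel’s steps, which is why the slowdown feels so sudden once data grows.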

4.1.5. Constructing for Verification

Trouble writing tests?

The problem's not in your test suite. It's in your code.

— Sergey Kolodiy, Toptal

Writing code that can be easily tested through automation is another important strategy to reduce complexity. Below are a number of strategies to facilitate that goal.

Writing Testable Code, courtesy Miško Hevery @google:

Avoid significant work in the constructor of an object.

Avoid digging into collaborators (other objects).

Avoid global state and singletons, which make code brittle and dependent.

Avoid classes that do too much.

Writing Testable Code, courtesy tvanfosson @stackoverflow:

TDD—Write the tests first, which forces you to think about testability and helps write the code that is actually needed, not what you think you may need.

Refactoring to interfaces – makes mocking easier

Public methods virtual if not using interfaces – makes mocking easier

Dependency injection – makes mocking easier

Smaller, more targeted methods – tests are more focused, easier to write.

Avoidance of static classes

Avoid singletons, except where necessary

Avoid sealed classes

Finally, aim for “pure” (side-effect-free) functions. Keep the number of dependencies low, as the number of cases to test expands with each addition.
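A minimal Python sketch of several of these ideas together, with hypothetical names: the decision logic lives in a pure function, the constructor only stores its collaborator, and the clock is injected (dependency injection) rather than read from global state, so a test can substitute a fixed time without any mocking library.

```python
from datetime import datetime
from typing import Callable


def greeting_for(hour: int) -> str:
    """Pure function: the same hour always yields the same greeting, with no side effects."""
    if hour < 12:
        return "Good morning"
    if hour < 18:
        return "Good afternoon"
    return "Good evening"


class Greeter:
    def __init__(self, clock: Callable[[], datetime] = datetime.now):
        # No significant work here: just keep a reference to the injected clock.
        self._clock = clock

    def greet(self, name: str) -> str:
        return f"{greeting_for(self._clock().hour)}, {name}!"


if __name__ == "__main__":
    def fixed_clock():
        # In a test, a fixed clock makes the result deterministic.
        return datetime(2024, 1, 1, 9, 30)

    print(Greeter(clock=fixed_clock).greet("Ada"))  # Good morning, Ada!
```

Production code calls Greeter() and gets the real clock by default; only tests pass a substitute.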

4.1.6. Test-driven Development

Write the tests you wish you had. If you don't, you will eventually break something while refactoring. Then you'll get bad feelings about refactoring and stop doing it so much. Then your designs will deteriorate. You'll be fired. Your dog will leave you. You will stop paying attention to your nutrition. Your teeth will go bad. So, to keep your teeth healthy, retroactively test before refactoring.

— Kent Beck, TDD: By Example

Test-Driven Development (also known as test-first programming, or by the initials TDD) is a methodology that can “detect defects earlier, and correct them more easily than traditional programming styles.” In short, write the tests first. Then write the smallest amount of code necessary to make the tests pass. Resist the urge to write more code. Refactor, and repeat until the tests express all of the requirements.
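A compressed sketch of one TDD cycle using Python’s built-in unittest framework and a hypothetical slugify() function. In practice the tests are written and run (and seen to fail) before the implementation exists; here both sit in one file so the example runs as-is.

```python
import re
import unittest


# Step 1 (red): write the tests first, describing the required behavior.
class TestSlugify(unittest.TestCase):
    def test_lowercases_and_joins_words_with_hyphens(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_drops_punctuation(self):
        self.assertEqual(slugify("Ready, set, go!"), "ready-set-go")


# Step 2 (green): the smallest implementation that makes both tests pass.
def slugify(text: str) -> str:
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(words)


# Step 3 (refactor): clean up with the tests as a safety net, then repeat
# with the next requirement (for example, accented characters).
if __name__ == "__main__":
    unittest.main()
```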

Diving In…

Author Mark Pilgrim lists the benefits of unit testing and TDD clearly in his book, Dive Into Python:

Before writing code, writing unit tests forces you to detail your requirements in a useful fashion.

While writing code, unit tests keep you from over-coding. When all the test cases pass, the function is complete.

When refactoring code, they can help prove that the new version behaves the same way as the old version.

When maintaining code, having tests will help you cover your ass when someone comes screaming that your latest change broke their old code.

When writing code in a team, having a comprehensive test suite dramatically decreases the chances that your code will break someone else’s code, because you can run their tests first.

Note that while this is a valuable strategy to improve Software Quality, it creates extra work when software designs have not yet solidified, or are not very good and need to be changed. Still, the changes will happen with increased confidence and restful nights.

See also: Books

Test Driven Development: By Example, by Kent Beck

Believe it or not, a breezy, fun book on testing first (see quote above).

Warning: TDD a Silver-Bullet?

Is it or isn’t it? TDD is often portrayed as a silver bullet for whatever ails a project. While it will often improve an established project, it can slow down an exploratory one: #NoTDD

If you look at who were the early TDD proponents, virtually all of them were consultants who were called in to fix failing enterprise projects. When you're in this situation, the requirements are known. You have a single client, so you can largely do what the contract says you'll deliver and expect to get paid, and the previous failing team has already unearthed many of the "hidden" requirements that management didn't consider. So you've got a solid spec, which you can translate into tests, which you can use to write loosely-coupled, testable code.

— nostrademons

TDD can miss Emergent Properties as well. As always, consider the context in which TDD is applied. Are we building a social-network or fighter avionics?

4.1.7. Behavior-driven Development

Behaviour-driven Development is about implementing an application by describing its behavior from the perspective of its stakeholders.

— Dan North

Behavior-driven Development, or BDD, is an extension of Test-driven Development and Domain-Driven Design that revolves around writing down Requirements from the perspective of the client, using the natural and domain-specific languages (or jargon) understandable to them. It is the same type of work you’ll do when writing acceptance tests, and the two are therefore closely related.
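A minimal sketch of the idea in plain Python, with a hypothetical shopping cart and coupon: the scenario is written first in the stakeholder’s Given/When/Then language, and the test mirrors it step by step. Tools such as behave keep the scenario in a separate .feature file and bind each step to a function; this version skips the tool so it runs without extra dependencies.

```python
# Scenario: Applying a coupon
#   Given a cart containing 2 books at $10.00 each
#   When the customer applies the coupon "SAVE10"
#   Then the total is $18.00

COUPONS = {"SAVE10": 10}  # hypothetical coupon code -> percent off


class Cart:
    def __init__(self):
        self._subtotal_cents = 0
        self._percent_off = 0

    def add(self, price_cents: int, quantity: int = 1) -> None:
        self._subtotal_cents += price_cents * quantity

    def apply_coupon(self, code: str) -> None:
        if code not in COUPONS:
            raise ValueError(f"Unknown coupon {code!r}; check the code and try again.")
        self._percent_off = COUPONS[code]

    def total_cents(self) -> int:
        # Integer cents avoid floating-point surprises; // rounds any fractional cent down.
        return self._subtotal_cents * (100 - self._percent_off) // 100


def test_applying_a_coupon():
    # Given a cart containing 2 books at $10.00 each
    cart = Cart()
    cart.add(price_cents=1000, quantity=2)
    # When the customer applies the coupon "SAVE10"
    cart.apply_coupon("SAVE10")
    # Then the total is $18.00
    assert cart.total_cents() == 1800


if __name__ == "__main__":
    test_applying_a_coupon()
    print("Scenario passed")
```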

See also: BDD Philosophy and Behave Tool

“Introducing BDD,” by Dan North

A great discussion with code examples can be found at the site of the “behave” tool.

4.1.8. Feedback Loops

“Yep,” a professional programmer told me, “we used to keep a sit-up board in the office and do sit-ups while we were doing compiles. After a few months of programming I had killer abs.”

— Joel Spolsky, Blogger/co-founder StackExchange

Fig. 4.8 Got Feedback?

Simply put, the speed of a feedback loop is the length of time between taking an action and being able to observe its results. While a short feedback loop has little effect on productivity, a long one can cripple it.

You may have never paid it much thought as a student; hardware is fast and collaboration discouraged. But has your computer ever run out of memory? A modern computer will respond very quickly to keystrokes: hit keys, and symbols appear on the screen immediately. When it starts “swapping,” however, a delay of a second or more is added to each response. The computer quickly becomes unusable due to the high latency of feedback.

Feedback loops are common everywhere in software engineering and project management. They occur during building, debugging, communicating with colleagues, and planning, among other activities. When building software, the compilation and linking process of a large app can take long enough that there’s even an XKCD comic on the subject. Some languages are known for slow compilation (such as C++), but others have been designed for build speed (Pascal, Golang, and arguably interpreted languages), hugely “uncrippling” daily productivity. Does it take five minutes to add a missing semicolon to your project? If so, kiss the schedule goodbye. One reason many scripting languages are so productive is that they often include a live interpreter at your console, aka a REPL (read-eval-print loop), resulting in instant feedback.
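To make the acronym concrete, here is a toy read-eval-print loop in Python (a hypothetical helper, far simpler than the real interactive interpreter): each line is read, evaluated, and its result printed immediately, which is where the instant feedback comes from. It uses eval and exec, so it should only ever be fed trusted input.

```python
def toy_repl(lines):
    """Read, evaluate, and print each line in turn; returns the printed results."""
    namespace = {}
    printed = []
    for line in lines:                                   # Loop: one pass per line
        try:
            code = compile(line, "<toy-repl>", "eval")   # Read: an expression, e.g. x + 1
        except SyntaxError:
            # Not an expression, so run it as a statement, e.g. x = 6 * 7.
            exec(compile(line, "<toy-repl>", "exec"), namespace)
            continue
        result = eval(code, namespace)                   # Eval
        if result is not None:
            print(repr(result))                          # Print
            printed.append(repr(result))
    return printed


if __name__ == "__main__":
    toy_repl(["x = 6 * 7", "x", "x + 1"])  # prints 42, then 43
```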

Getting feedback from people can be a huge bottleneck as well. Does another department have to get involved in every code change you make? See you in hell, my friend (haha). Maybe you’re in North America working with a team in India. Unless one of you takes the night shift, your feedback loop is now one day. What, your questions/instructions were misunderstood? Make that two days. :-/ Time to project completion just explodes from there.

Anecdote Time - Feedback Hell

The author once had such a “contract from hell” where the customer was in a secure government facility—no internet allowed, thanks. It took about two hours (and two pages) to write the first draft of a script needed to manage their otherwise unremarkable system. Experience had shown there’d normally be another hour or two of back and forth to adapt it locally.

Well, this job was a bit different. The script needed to be emailed to a technician in Kansas City, who’d send it on to an only mildly technical customer, who’d sneakernet it over to its target host. He’d walk the script over on a thumb drive, run it, and watch what happened. He’d then try to describe the result in business-ese, or perhaps take a screenshot (and paste it into a Word doc, d’oh), then mail it back. He was busy, so he only had time to do that once a week or so, sigh. So that was the feedback loop: not once every few seconds, but once a week. Care to guess how long that “four-hour” project eventually took from start to finish? Wait for it… five months! (I shit you not.) In the final weeks, the guy in KC broke down and bought a plane ticket to the install site. Sure enough, we made a breakthrough a few days later and he had to cancel it.

Remember kids—if you’d like to stretch a four-hour job out to five months, a humble weekly feedback loop is your answer!

See also: Online Resources

Another example from consultant and user foxfired on HN. (Twenty hours stretched to a month and a half!)

Harnessing the Power of Feedback Loops, an interesting discussion on feedback loops in the rest of the world and how to use them to change behavior.

4.2. Process

In general, software construction is mostly coding and debugging, but it also involves construction planning, detailed design, unit testing, integration testing, and other activities.

— IEEE SWEBOK Guide V3.0 (Ch. 3)

Fig. 4.10 Stages of Construction

The major tasks of the construction phase are as follows:

Programming

Developer testing

Debugging

4.2.1. Programming

K.I.S.S.: Keep it simple, stupid!

— Kelly Johnson

Programming is the process of writing down the instructions that solve a problem for the benefit of end users, and of communicating with others on the team. The word “coding” is often used for this activity; however, it implies a mechanical transcription or translation of some sort, whereas the words programming, development, and engineering acknowledge the much more creative process that it is. A number of development techniques, tools, and best practices found effective are described below.

4.2.1.1. Tools

The first tool a developer must master is a programmer’s text editor, whether standalone or part of an integrated development environment (IDE). Editors may be broken down further into terminal and graphical user interface (GUI) categories. Terminal editors usually have unique and sophisticated keystroke command languages that are quite efficient but take a while to learn. The major contenders are vim and emacs.

Vim (Vi IMproved) is a lightweight and powerful editor. It, or its predecessor vi, is installed by default on nearly all Unix-like operating systems (often reached remotely via ssh), making it almost universally available and invaluable in a pinch. Accordingly, everyone should be familiar with inserting text (type i, then edit) and with saving and exiting (press Esc, then type :wq). Do yourself a favor and take a short vim tutorial at your earliest convenience.

Addendum: just read that vim key-bindings work well on a phone keyboard.

Emacs is extremely extensible and unique in that it is as large and powerful as an IDE but typically runs in a terminal.

GUI editors are understandably better integrated into modern desktops and have superior graphical capabilities. They use standard GUI key bindings, which speed learning and may reduce context switching between apps, though they are a bit slower at raw editing throughput than terminal editors like vim. They don’t run efficiently over the network (X or Remote Desktop), though editing files over a network mount (NFS, Samba, sshfs) is a good option. Examples:

Sublime Text is a popular, powerful, and proprietary editor.

Geany is a lesser-known, lightweight FLOSS GUI editor and environment with a number of powerful plugins available. Recommended for beginners and experts alike who value the KISS rule.

IDEs bundle powerful productivity-enhancing features such as an editor, SDKs, and tools like code completion, static analysis, “search everywhere,” debugging, and support for refactoring across a whole project. They are often, but not always, centered on a single language or platform, though almost all additionally support web and templating languages these days.

Profiling tools monitor code as it runs and record how long each unit takes in a cumulative fashion, directing attention to where optimization is needed.

Analysis tools (both static and dynamic) are available for major languages and platforms. These automated tools warn about actual and potential problems in a code base, supporting project scalability. Notable examples are JSLint, JSHint, or ESLint for JavaScript, and Pyflakes for Python. Memory-debugging tools such as Valgrind are essential for catching bugs when manually allocating memory in a language like C.
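To give a feel for what such tools do, here is a toy static check written with Python’s standard ast module: it parses source code without running it and reports imports that are never used, one of the kinds of warning Pyflakes emits. It is a hypothetical illustration, far less thorough than the real tools (it ignores, for example, names referenced only inside strings or `__all__`).

```python
from __future__ import annotations

import ast


def unused_imports(source: str) -> list[str]:
    """Report names that are imported but never referenced (a toy Pyflakes-style check)."""
    tree = ast.parse(source)  # parse only; the code is never executed
    imported: dict[str, int] = {}
    for node in ast.walk(tree):
        if isinstance(node, (ast.Import, ast.ImportFrom)):
            for alias in node.names:
                if alias.name == "*":
                    continue  # star imports can't be checked this simply
                # "import os.path" binds the name "os"; "import x as y" binds "y".
                name = alias.asname or alias.name.split(".")[0]
                imported[name] = node.lineno
    used = {node.id for node in ast.walk(tree) if isinstance(node, ast.Name)}
    return [
        f"line {lineno}: '{name}' imported but unused"
        for name, lineno in sorted(imported.items(), key=lambda item: item[1])
        if name not in used
    ]


if __name__ == "__main__":
    sample = "import os\nimport sys\nprint(sys.argv)\n"
    for warning in unused_imports(sample):
        print(warning)  # line 1: 'os' imported but unused
```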

Other important tools a developer should know are diff and build tools like make. Code formatters, such as the *tidy family and Go’s gofmt, are useful as well.

Command-line Shells

Proficiency with a command shell is a must as a programmer. A clicky-pointy-only developer will be laughed out of the room. ;) Choose one of the shells below and read through its full documentation:

bash (default)

zsh (powerful)

fish (friendly)

cmd (legacy), powershell (Windows)

You’ll be glad you did. (Once the author completed one of these shell manuals and worked the techniques into his repertoire, mere mortals started treating him as if he were some kind of god.)

Tip

It’s your job as a professional to keep on top of the best tools for platforms you target, and across the industry.

4.2.1.2. Coding Standards

There are two hard things in computer science: cache invalidation, naming things, and off-by-one errors.

— Phil Karlton, Martin Fowler

Coding standards are a way to improve and promote a project’s consistency at the level of style. Also known as a style guide, a coding standard should encompass naming conventions, layout, and indentation rules, among other things.

While we’d all like to do things “our way,” we recognize that a project with five different coding styles is worse than one with a single style, even a sub-optimal one. When all the code in a large codebase is in one standard form, it is easier to understand, distractions are reduced, and bugs stick out. Following a common style guide for your platform helps new team members get their bearings and find their way around your code more quickly.

Comments

Don't comment bad code—rewrite it.

— Kernighan and Plauger, "The Elements of Programming Style"

A minor note on comments. Comments should not reiterate the code but explain the why behind its design. Readable code explains the what and the how, so prefer good names and documentation over comments.
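A short Python illustration with a hypothetical retry helper: the commented-out line at the top shows a comment that merely restates the code, while the comment inside the except block records the why, which the code alone cannot express. The flaky-upstream rationale is invented for the example.

```python
import time

MAX_ATTEMPTS = 3

# Restating the what adds nothing:
#     attempt += 1  # add one to attempt


def fetch_with_retry(fetch, sleep=time.sleep):
    """Call fetch(), retrying on ConnectionError, up to MAX_ATTEMPTS calls in total."""
    for attempt in range(MAX_ATTEMPTS):
        try:
            return fetch()
        except ConnectionError:
            if attempt == MAX_ATTEMPTS - 1:
                raise
            # Why: the (hypothetical) upstream service drops connections for a few
            # seconds while it restarts, so backing off 1s, then 2s, usually succeeds.
            sleep(2 ** attempt)


if __name__ == "__main__":
    print(fetch_with_retry(lambda: "response"))  # response
```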

Error Messages

Further, it’s important to write meaningful error messages that give the user an idea of how to overcome problems. Few things so clearly separate professional software from amateur software as the quality of its messages. Return status codes complement, but do not obviate, useful error messages.
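A minimal Python sketch using a hypothetical configuration helper: each error message says what was wrong, shows the offending value, and tells the user how to fix it, rather than surfacing a bare low-level exception.

```python
def parse_port(value: str) -> int:
    """Convert a user-supplied port setting to an int, with actionable error messages."""
    try:
        port = int(value)
    except ValueError:
        # "from None" suppresses the chained low-level "invalid literal for int()" error.
        raise ValueError(
            f"Invalid port {value!r}: expected a whole number such as 8080."
        ) from None
    if not 1 <= port <= 65535:
        raise ValueError(f"Port {port} is out of range: choose a value from 1 to 65535.")
    return port


if __name__ == "__main__":
    for setting in ["8080", "http", "70000"]:
        try:
            print(parse_port(setting))
        except ValueError as err:
            print(f"Error: {err}")
```

Compare the first message with Python’s default for the same mistake, “invalid literal for int() with base 10: 'http'”, which is accurate but leaves the user to work out what to do next.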

4.2.1.3. Technical Reviews¶

…I believe that peer code reviews are the single biggest thing you can do to improve your code.

— Jeff Atwood, co-founder StackExchange

Fig. 4.11 “Thank you, Mr. Spock.”¶

Peer review of project code is a powerful technique to improve project quality and velocity, piercing developer blind spots and fixing problems early in the development process. Constructive reviews develop junior engineers into senior engineers, and may even break senior engineers of bad habits they’ve grown accustomed to.

Technical reviews have been found to be quite effective. In one study, errors were brought down from 4.5 to 0.82 per 100 lines of code (Freedman and Weinberg 1990). Author Karl E. Wiegers lists additional benefits:

Less time spent performing rework

Increased programming productivity

Better techniques learned from other developers

Reduced unit-testing and debugging time

Less debugging during integration and system testing

Improved understanding/communication regarding system

Shortened product development cycle time

Reduced field service and customer support costs

Formal Inspections

Reviews are more cost effective on a per-defect-found basis because they detect both the symptom of the defect and the underlying cause of the defect at the same time. Testing detects only the symptom of the defect; the developer still has to find the cause by debugging.

— Steve McConnell, Rapid Development (Ch. 4)

The precursor and formal analogue to code review is the Fagan Inspection, invented not by Steely Dan founder Donald Fagen but by Michael Fagan of IBM, and said to remove 60-90% of errors before the first test is run (Fagan 1975). On inspections, courtesy of “The Silver Bullet No-one Wants to Fire,” OSEL, Oxford Software Engineering Ltd.:

A widely applicable, rigorous and formal software engineering QC technique.

Inspection of any document when it is believed complete and ready for use.

Finds defects (directly – unlike testing, which happens later, and only reveals symptoms)

Wow, why don’t we use that for everything, you’re probably asking? Well, it’s expensive to have multiple people involved in a formal process. Also, it’s been found that reviewers tire after about an hour (Cohen 2006). A lighter-weight code review process can get us the majority of the benefit at a lower cost, and with fewer dead trees. If your reliability requirements are very strict, however, this will be one of the tools you’ll reach for.

Reference

Fagan, Michael E. “Design and Code Inspections to Reduce Errors in Program Development,” IBM Systems Journal, vol. 15, no. 3, 1976, pp. 182-211.

Fagan, Michael E. “Advances in Software Inspections,” IEEE Transactions on Software Engineering, July 1986, pp. 744-751.

Tip: Humanizing Peer Reviews

How to avoid hurt feelings when reviewing, by Karl E. Wiegers:

Ask questions rather than state facts when critiquing.

Scratch each other’s backs with constructive criticism.

Practice egoless programming: separate the work from the person.

Direct comments to the work product, not to the author.

See also: Online Resources

Need some help on a side-project? The Code Review StackExchange “is a question and answer site for seeking peer review of your code.”

No nitpicking in code reviews, Matt Layman

4.2.1.4. Pair Programming¶

Pair programming is a dialog between two people trying to simultaneously program (and analyze and design and test) and understand together how to program better. It is a conversation at many levels, assisted by and focused on a computer.

— Kent Beck, Extreme Programming Explained

Pair programming is two developers working “uno animo” (with one mind, as Fred Brooks liked to write) at a single workstation, delivering the benefits of code review immediately. While overkill for mundane jobs, it can be quite useful for the hardest, most important, or architectural portions of a project.

The “driver” writes the code, while the observer (or navigator) actively checks it and thinks strategically about design and direction. The two change places regularly. A number of helpful tips for pair programming can be found in Code Complete by Steve McConnell (Ch. 21):

Coding Standards are essential in order to avoid focus on incidentals.

Keep a matching pace, or benefits are limited.

Rotate work assignments, so developers learn different parts of the system.

Don’t force people who don’t like each other to pair.

Don’t pair two newbies together.

Pair programming can improve code quality, shorten schedules, and promote collective ownership.

See also: Online Resources

Pair programming is overkill for 80% of programming tasks but is useful for the right 20%. Do you really need a co-pilot to pull the car into the garage? In other words, not every software task is akin to brain surgery or flying a 747.

— Michael Daconta, Software Engineer

The book Extreme Programming helped popularize Pair Programming, which we’ll explore further in a future chapter.

InfoQ has a great introductory article on the subject.

4.2.1.5. Refactoring¶

Refactoring (noun): a change made to the internal structure of software to make it easier to understand and cheaper to modify without changing its observable behavior.

Refactor (verb): to restructure software by applying a series of refactorings without changing its observable behavior.

— Martin Fowler

The quote above assumes some knowledge, so let’s back up a bit. What does factoring mean? Remember back to algebra, when you had to find common factors, meaning to remove the parts in common and consider them separately? For example, let’s find a factor of 2y + 6, courtesy of the math is fun site:

Both 2y and 6 have a common factor of 2:

2y is 2 × y

6 is 2 × 3

So you can factor the whole expression into:

2y + 6 = 2(y + 3)

The common factor 2 has now been “factored out” of the expression. It’s the same idea when factoring code: first take the parts in common, create a function or method to hold them (making it a bit more general with parameters as needed), then replace the duplicated code with calls to the new factored code instead.

The re- in refactoring reminds us to repeat the process.

Dive into Refactoring…

Author Mark Pilgrim explains refactoring with “great skillful-skill” in his book, Dive Into Python:

Refactoring is the process of taking working code and making it work better. Usually, “better” means “faster”, although it can also mean “using less memory”, or “using less disk space”, or simply “more elegantly”. Whatever it means to you, to your project, in your environment, refactoring is important to the long-term health of any program.

Code is never perfect on the first draft, and probably not on the tenth. Clarity, reuse, doing one thing, principles, performance, testability, and maintainability are all goals to be achieved through refactoring.

4.2.1.6. Defensive Programming¶

Defensive programming is similar to defensive driving: you need to take precautions and protect yourself from others’ mistakes as well as your own. That means:

Protecting against invalid inputs, by returning an error code, or raising an exception.

Use of assertions, statements expected to be true that fail immediately otherwise, instead of several steps downstream.

Use of constants instead of variables when possible.

Use of checksums during communication or storage.

Proper initialization of variables in low-level languages.

Use of strn* functions rather than str* in C to prevent buffer overruns.

Turning on extra compiler warnings, enabling strict modes.

Using scalable practices, e.g. adding types and running analysis tools.

Use of TDD.

Canonicalization of filesystem paths, i.e. converting them to absolute, normalized form, to guard against malicious input such as path traversal.

Watchdog timers to detect error conditions and trigger restarts.

Tip: Don’t Hide Errors

When programming defensively in library code (good), you may be tempted to go an extra step to avoid an error, aka “swallowing” it (bad). Errors must be raised immediately so that bugs can be found and fixed as easily as possible; otherwise they’ll be postponed until “god knows when” and potentially lost. In production software, crashing is normally not an option. Instead, catch the error at the application level and log it extensively, but never hide it. See “Exceptions in the rainforest” for more details.
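A minimal Python sketch of that split, with hypothetical `parse_record` and `import_records` functions: the library-level function raises as soon as it sees bad data, and only the application-level loop catches the error, logging it with its traceback through the standard `logging` module rather than discarding it.

```python
import logging

logger = logging.getLogger("app")


def parse_record(line: str) -> dict:
    """Library-level code: validate, and raise instead of swallowing errors."""
    name, sep, age = line.partition(",")
    if not sep or not name.strip():
        raise ValueError(f"malformed record: {line!r}")
    # Swallowing here (e.g. returning {} on failure) would hide the bug.
    return {"name": name.strip(), "age": int(age)}  # int() raises on bad input


def import_records(lines: list[str]) -> list[dict]:
    """Application-level code: catch, log with traceback, and keep going."""
    records = []
    for number, line in enumerate(lines, start=1):
        try:
            records.append(parse_record(line))
        except ValueError:
            # logger.exception logs at ERROR level and includes the traceback.
            logger.exception("skipping line %d", number)
    return records
```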

See also: Online Resources

More on defensive programming, centered on C programming, at Dr. Dobb’s.

4.2.2. Developer Testing¶

A reasonable combination of unit and integration tests ensures that every single unit works correctly, independently from others, and that all these units play nicely when integrated, giving us a high level of confidence that the whole system works as expected.

— Sergey Kolodiy, Toptal

To ensure a baseline of quality, tests that developers perform include unit, integration, and system testing, with each level of testing built on the previous.

As we will discuss below and in the Quality Assurance phase, testing is necessary to deliver a professional product. When designed with a concern for quality up front, constructed for verification, and supported by scalable practices, these steps should go swimmingly.

4.2.2.1. Unit Testing¶

It takes three times the effort to find and fix bugs in system test than when done by the developer. It takes ten times the effort to find and fix bugs in the field than when done in system test.

Therefore, insist on unit tests by the developer.

— Larry Bernstein, Bell Communications Research

A unit test is a small test, narrow in scope, that exercises one unit (think function or method) of code independently from other units. Each test should validate the unit in a single case and run quickly. Ideally, these tests should be written by the author of the unit rather than an external team for efficiency reasons, as the code and its design will be fresh in mind.

Common types of unit tests include:

Positive, testing that the unit responds properly to valid inputs.

Negative, testing that the unit responds properly to invalid inputs by returning an error signal, raising an exception, etc., rather than a wrong answer.

Boundary, testing around the limits of a unit. E.g. for an integer input, testing 0, midpoints, the highest/lowest numbers, and values just beyond them.

Off-by-one errors are often found here.
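As a sketch, here are the three kinds of test written with Python’s standard `unittest` module, the xUnit-style framework mentioned under Getting Started below. The `clamp_percent` function under test is hypothetical.

```python
import unittest


def clamp_percent(value: int) -> int:
    """Unit under test (hypothetical): clamp an int to the range 0..100."""
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(value, bool) or not isinstance(value, int):
        raise TypeError(f"expected an int, got {type(value).__name__}")
    return max(0, min(100, value))


class ClampPercentTests(unittest.TestCase):
    # Positive: a valid, ordinary input gives the right answer.
    def test_midpoint(self):
        self.assertEqual(clamp_percent(50), 50)

    # Negative: invalid input raises instead of returning a wrong answer.
    def test_rejects_non_int(self):
        with self.assertRaises(TypeError):
            clamp_percent("50")

    # Boundary: the limits themselves and one step past each side,
    # which is where off-by-one errors tend to hide.
    def test_boundaries(self):
        cases = [(-1, 0), (0, 0), (1, 1), (99, 99), (100, 100), (101, 100)]
        for given, expected in cases:
            with self.subTest(given=given):
                self.assertEqual(clamp_percent(given), expected)
```

Saved as a module, these can be run with `python -m unittest` followed by the module name.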

“His prices are (criminally) INSANE!”

One limitation to developer testing is that developers tend to write positive tests, assuming code will work. There’s not a lot of time on a busy project to brainstorm on what those “crazy users” might try. Simply realizing this may help expand your repertoire, however.

Tip: Testing Difficulties

Having trouble writing tests? As mentioned in Constructing for Verification, what makes code hard to test is not a lack of “secret” testing techniques, but rather poorly-designed code.

See also: Online Resources

Unit Tests, How to Write Testable Code and Why it Matters, by Sergey Kolodiy, Toptal

This is a well-written and informative article on how to lift your code to the next level. C# based, but widely applicable.

Testing your code

There are a number of valuable tips in this tutorial and checklist. Python based, but widely applicable.

4.2.2.2. Integration Testing¶

Integration testing verifies the interaction of multiple (previously-tested) units as a group. Larger components and modules of your project are tested to make sure they work together correctly, by exercising the interfaces and communication-paths between them. The testing may proceed from a top-down or bottom-up approach. Integration may require prerequisites such as a populated database or network services to be available.
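A minimal sketch of an integration test in Python, using the standard `sqlite3` and `unittest` modules. The two classes are hypothetical units that would each have their own unit tests; here they are exercised together against a real, in-memory database, which stands in for the prerequisite mentioned above.

```python
import sqlite3
import unittest


class UserRepository:
    """Unit 1 (hypothetical): persistence, talking to a SQL database."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        conn.execute("CREATE TABLE IF NOT EXISTS users (email TEXT PRIMARY KEY, name TEXT)")

    def add(self, email: str, name: str) -> None:
        with self.conn:  # commits on success, rolls back on error
            self.conn.execute("INSERT INTO users VALUES (?, ?)", (email, name))

    def find(self, email: str):
        row = self.conn.execute(
            "SELECT name FROM users WHERE email = ?", (email,)).fetchone()
        return row[0] if row else None


class SignupService:
    """Unit 2 (hypothetical): business rules, built on the repository."""

    def __init__(self, repo: UserRepository):
        self.repo = repo

    def sign_up(self, email: str, name: str) -> bool:
        email = email.strip().lower()
        if self.repo.find(email) is not None:
            return False  # already registered
        self.repo.add(email, name)
        return True


class SignupIntegrationTest(unittest.TestCase):
    def setUp(self):
        # Prerequisite: a fresh, real (in-memory) database for each test.
        self.conn = sqlite3.connect(":memory:")
        self.service = SignupService(UserRepository(self.conn))

    def tearDown(self):
        self.conn.close()

    def test_signup_is_persisted_and_duplicates_rejected(self):
        self.assertTrue(self.service.sign_up(" Ada@Example.com ", "Ada"))
        self.assertEqual(self.service.repo.find("ada@example.com"), "Ada")
        self.assertFalse(self.service.sign_up("ADA@example.com", "Imposter"))
```

The test checks the interface between the two units (normalized email in, stored row out), which neither unit’s own tests would cover.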

Don’t wait long to integrate and test, as problems accumulate over time and their causes become harder to pinpoint. Consequently, a daily build and “smoke test” has become standard in the industry to reduce integration risk.

From Code Complete, Steve McConnell, Ch. 29, “Daily Build and Smoke Test”:

When the product is built and tested every day, it’s easy to pinpoint why the product is broken on any given day. If the product worked on Day 17 and is broken on Day 18, something that happened between the two builds broke the product.

Daily builds can reduce integration problems, improve developer morale, and provide useful project management information.

See also: Online Resources

Daily build and Smoke Test, by Steve McConnell, Best Practices, IEEE Software

So, if daily integration is so great, why not do it all the damn time?

Continuous Integration

Continuous Integration doesn’t get rid of bugs, but it does make them dramatically easier to find and remove.

— Martin Fowler, ThoughtWorks

Well, that’s the idea behind continuous integration (aka CI). Code is integrated, built, and automatically tested several times a day, or on every check-in, providing immediate feedback to developers.

Continuous integration need not mean continuous delivery, however. More conservative projects will need another layer of testing before promoting a build to production, since such a rapid deployment pace could be dangerous.
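Stripped to its core, a CI job runs the same steps on every check-in and fails fast. The Python sketch below is hypothetical: the `src` and `tests` directory names are assumptions, and hosted services such as those listed below describe their steps in their own configuration formats instead. It relies only on the standard `compileall` and `unittest` command-line modules.

```python
import subprocess
import sys

# Hypothetical pipeline: each step is a name plus a command to run.
STEPS = [
    ("build", [sys.executable, "-m", "compileall", "-q", "src"]),
    ("test", [sys.executable, "-m", "unittest", "discover", "-s", "tests"]),
]


def run_pipeline(steps) -> int:
    """Run steps in order; stop at the first failure so feedback is fast."""
    for name, command in steps:
        print(f"== {name}: {' '.join(command)}", flush=True)
        result = subprocess.run(command)
        if result.returncode != 0:
            print(f"FAILED at step {name!r} (exit code {result.returncode})")
            return result.returncode
    print("All steps passed")
    return 0


def main() -> None:
    # A non-zero exit status is what tells a CI server the build is broken.
    sys.exit(run_pipeline(STEPS))
```

A script like this would call `main()` from its entry point; the CI server only needs to run it and check the exit status.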

See also: Implementing CI

Continuous Integration, by Martin Fowler, is a comprehensive piece on CI, including implementation steps.

Best Practices

Continuous Integration, by C. Titus Brown and Rosangela Canino-Koning, “The Architecture of Open Source Applications”

Continuous Integration: CircleCI vs Travis CI vs Jenkins

Todo

More than a process

Continuous Integration is backed by several important principles and practices.

After integration testing has been completed successfully on the components of a project, whole system testing may begin.

4.2.2.3. System Testing¶

System testing is usually considered appropriate for assessing the non-functional system requirements—such as security, speed, accuracy, and reliability.

— SWEBOK Guide v3, Ch. 4

System testing, sometimes called “end to end” (or E2E) testing, verifies that the different parts (i.e. integrated components) of a complete system work together as designed in a real-world environment. It should be considered a form of black-box testing, without knowledge of internal workings of the software.

System testing should test the whole project end-to-end and verify agreement with requirements and functional specifications. While it is often largely conducted by a separate Quality Assurance team, developers will need to be aware of the process and contribute to it in parallel.
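A minimal black-box check in Python, for illustration only: real system testing exercises the complete, integrated system in a realistic environment, but the shape is similar. The test drives the program from the outside as a separate process and inspects only its output and exit status. The tiny word-count program is a hypothetical stand-in for the system under test.

```python
import subprocess
import sys
import tempfile
import textwrap
from pathlib import Path

# Hypothetical stand-in for the system under test: a tiny word-count CLI.
PROGRAM = textwrap.dedent("""
    import sys
    if len(sys.argv) != 2:
        sys.exit("usage: wc.py FILE")
    with open(sys.argv[1], encoding="utf-8") as f:
        print(len(f.read().split()))
""")


def test_word_count_end_to_end() -> None:
    """Black-box check: only inputs and observable outputs are examined."""
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp, "wc.py")
        script.write_text(PROGRAM, encoding="utf-8")
        sample = Path(tmp, "sample.txt")
        sample.write_text("the quick brown fox\n", encoding="utf-8")

        ok = subprocess.run([sys.executable, str(script), str(sample)],
                            capture_output=True, text=True, timeout=30)
        assert ok.returncode == 0 and ok.stdout.strip() == "4"

        # Misuse should fail loudly, with a usage message and non-zero status.
        bad = subprocess.run([sys.executable, str(script)],
                             capture_output=True, text=True, timeout=30)
        assert bad.returncode != 0 and "usage" in bad.stderr
```

Because the function name starts with `test_`, a runner such as pytest would collect it automatically; it can also simply be called directly.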

4.2.2.4. Getting Started¶

If you’re just getting started with testing, are “curious” about developer testing, and would like to see examples of tests with code, this great half-hour crash course by Ned Batchelder at PyCon is highly recommended. As the Python unittest module is patterned on xUnit (aka JUnit, NUnit, CppUnit, etc.), the tutorial is broadly applicable.

See also: The Way of Testivus

Testing techniques for the “rest of us”. “Good advice on developer and unit testing, packaged as twelve cryptic bits of ancient Eastern wisdom,” by Alberto Savoia:


See also: Online Resources

4.2.3. Debugging¶

Debugging is twice as hard as writing the code in the first place. So if you're as clever as you can be when you write it, how will you ever debug it?

— Brian W. Kernighan

Fig. 4.16 A Sweet ’66¶

Debugging is the process of investigating, resolving, or working around defects (aka “bugs”) in software. You’ve probably noticed already that there are bugs not only in code or hardware but also in specs and documentation. Further, not all issues discovered will be considered for repair, due to cost, finite resources, frequency, compatibility, or other concerns.

Techniques

It's best to confuse only one issue at a time.

— Kernighan & Ritchie (Unconfirmed)

The first step in debugging is to reproduce the problem, which is not always easy. You’ve got to be systematic, using the scientific method. It’s important to change one thing at a time during construction, troubleshooting, or debugging, to avoid unnecessary entanglement or confusion.

Frequently, defects can be diagnosed and corrected informally through simple print statements from the program to standard-out in the console it is run from. In programs of a certain size a logging channel should be available, where messages from components across the code base may be directed. Logging is preferred to the simple print statement for numerous reasons. Messages may be categorized and filtered, sent to the console, log files, system event logs, or centralized network repositories. The program can be put into a mode known as “debug” mode, where verbose informational messages (which would normally be skipped for performance reasons) are output. Logging may be the only way to see what is happening in a remote application, or those that have no user-facing interface, such as a daemon or service.
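A minimal sketch of these ideas with Python’s standard `logging` module: named loggers per component (the `app.db` and `app.net` names are hypothetical), a switch for debug mode, filtering by category, and output to the console and optionally a file.

```python
import logging
import sys

# Hypothetical component loggers; "app.db" and "app.net" sit under "app".
log_db = logging.getLogger("app.db")
log_net = logging.getLogger("app.net")


def configure_logging(debug: bool = False, logfile: str = "") -> None:
    """Send messages to the console, and to a file too if one is given."""
    handlers = [logging.StreamHandler(sys.stderr)]
    if logfile:
        handlers.append(logging.FileHandler(logfile, encoding="utf-8"))
    logging.basicConfig(
        level=logging.DEBUG if debug else logging.INFO,  # "debug mode" switch
        format="%(asctime)s %(levelname)-8s %(name)s: %(message)s",
        handlers=handlers,
        force=True,  # replace any earlier configuration (Python 3.8+)
    )
    # Filtering by category: keep the chatty network component quiet.
    logging.getLogger("app.net").setLevel(logging.WARNING)


def fetch_user(user_id: int) -> dict:
    # Arguments are formatted only if the message is actually emitted,
    # so skipped debug messages cost little.
    log_db.debug("querying user %d", user_id)
    log_net.debug("opening connection")  # filtered out by the app.net level
    log_db.info("loaded user %d", user_id)
    return {"id": user_id}
```

A program might call `configure_logging(debug=True)` at startup when launched with a debug option, leaving the rest of the code unchanged.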

Debugging Tools

When a truly difficult bug is encountered however, it is time to break out the big guns—a professional debugger or debugging tool, whether standalone or built into an IDE. Choices include line-oriented console debuggers e.g. from the gdb tradition, curses-based full terminal debuggers, and GUI debuggers. All of these tools enable one to step through code as it executes line-by-line, look through stack frames, to inspect and modify variables. Low-level debuggers often allow inspection of core dumps (memory) for processes that have already crashed.

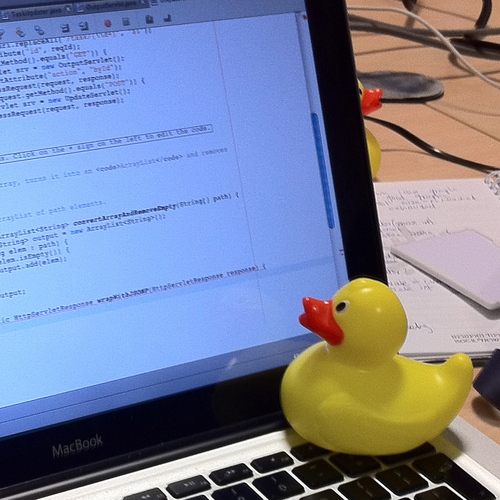

Tip: Rubber Ducks and Chickens

When faced with a difficult problem, the kind one needs professional tools to solve, it can help to explain the situation to a rubber duck or other object that’s willing to listen.

No, hold on a minute… ;-) Here’s an explanation from the cantlug mailing list:

We called it the Rubber Duck method of debugging. It goes like this:

Beg, borrow, steal, buy, fabricate or otherwise obtain a rubber duck (bathtub variety).

Place rubber duck on desk and inform it you are just going to go over some code with it, if that’s all right…

Explain to the duck what your code is supposed to do, and then go into detail and explain things line by line.

At some point you will tell the duck what you are doing next and then realise that that is not in fact what you are actually doing. The duck will sit there serenely, happy in the knowledge that it has helped you on your way.

The process of explaining itself illuminates and clarifies the problem, the first step to finding a solution. The term “rubber duck debugging” is a reference to a story in the book The Pragmatic Programmer, described later in this chapter. How does the duck do its magic? From Wikipedia:

Many programmers have had the experience of explaining a programming problem to someone else, possibly even to someone who knows nothing about programming, and then hitting upon the solution in the process of explaining the problem. In describing what the code is supposed to do and observing what it actually does, any incongruity between these two becomes apparent. More generally, teaching a subject forces its evaluation from different perspectives and can provide a deeper understanding. By using an inanimate object, the programmer can try to accomplish this without having to interrupt anyone else.

See also: Online Resources

Udacity: Software Debugging

4.2.4. Publishing Code¶

I love it when a plan comes together.

— John "Hannibal" Smith, The A-Team

Have no fear; we’ll discuss version control systems at length in a later chapter. The short story, if you are not yet aware, is that Professional™ developers use “version control” software to record the progress of their work and share it with others.

“Checking-in” describes the process of saving your work and making it available (publishing) to other developers, a fundamental process of software engineering. This is an important step because if you have broken any part of the existing project (created a regression), including any build processes, it could affect multiple people and halt their work until the problem can be fixed.

Therefore before checking-in new work, to keep standards high and the team productive, cultivate the following habits at publish time:

Confirm that you’ve included all new referenced files.

Run linting tools, to avoid trivial errors.

Do a rebuild, if necessary.

Run the test suite to find regressions.

Manually test the program one last time.

Check your work with a diff tool—you’ll often be surprised what crept in by mistake (or was forgotten).

Finally, if you haven’t yet collapsed from exhaustion, commit and check in (aka push) the code.

That’s it for construction; in the next chapter we’ll discuss software quality.

See also: Books

The Pragmatic Programmer: From Journeyman to Master, Andrew Hunt and David Thomas

Learn from decades of experience in Software Engineering and management, in an easy-to-read style.

TL;DR

K.I.S.S.: “Keep it simple, stupid!”

Always opt for simple and readable techniques over clever ones; you’ll thank yourself later.

Discourage exceptions and tie up “loose ends” early, before they impede productivity.

While incidental complexity has been slowly reduced over the years, essential complexity remains.

“A class should have only one reason to change.”

“Don’t repeat yourself!”

Organize by the principle of least surprise.

Consider project and team size when making technology and process choices.

There’s a 97% chance you’re wasting time when optimizing without a profiler.

Feedback loops must be of short duration for work to proceed.

Write testable, defensive code; consider TDD and pair programming.

Use a “daily build and smoke test” or CI to reduce integration risk.

Choose a coding standard/guide for your project, and follow it.

Refactoring is important to the long-term health of a project.

Become a master of your chosen toolset, and stay aware of others.

Hold code reviews regularly, and be constructive.

Insist on a test suite.

Always run analysis tools and tests before checking-in code.

4.1.9. Social Aspects¶

Contrary to popular belief, professional software development is often a social activity. Developers work in teams and must communicate effectively among themselves, as well as with folks in disparate disciplines such as design or business. Techniques for efficient communication are discussed further in the chapter on Project Management.