5. Software Quality¶

A person who knows how to fix motorcycles—with Quality—is less likely to run short of friends than one who doesn't.

— Robert M. Pirsig, Zen and the Art of Motorcycle Maintenance

When we discuss Software Quality, we more often use the original meaning of the word quality, meaning a “characteristic, property, or nature” rather than “superiority, or excellence.” Accordingly, a software product that has achieved an acceptable (agreed upon) level of quality will not be uniformly good, but merely “good enough” in required areas—a state of affairs often surprising to end-users.

Due to business priorities, the quality of a project at shipping time is usually not as high as we might hope. In many industries customers have come to grudgingly expect a ship-now and fix-later development strategy. They’ll often complain about it but inadvertently (and unwittingly) demand it. How’s that, you might ask? By purchasing software based on the feature(s) they need—above all else—with reliability being a secondary concern, if it even occurs to them to ask.

Fig. 5.2 Landing of Alpha One ¶

Unfortunately this approach to software development, while tolerable in low-risk contexts such as consumer software, has become so ubiquitous that inexperienced practitioners, unaware that other strategies even exist, have applied it to critical applications where it does not belong. Whether due to ignorance on the part of management, engineering, or both, the result has been disasters of various sorts, including deaths. While not all projects have to manage significant liability, this chapter will make the case that quality best practice starts early and saves time and money in the long run regardless.

Definitions:

IEEE 730, the “Standard for Software Quality Assurance Processes,” defines (functional) quality as:

The degree to which a system, component, or process meets specified requirements, customer needs, or expectations.

Structural quality, on the other hand, refers to internal quality attributes such as maintainability, portability, and adherence to sound software engineering practice. The standard defines quality assurance as:

A planned and systematic pattern of all actions necessary to provide adequate confidence that an item or product conforms to established functional and technical requirements.

A set of activities designed to evaluate the process by which the products are developed or manufactured.

The subject of Software Quality is a substantial discipline, rivaling Construction or departments of Computer Science in its breadth. Developers must understand the concerns, vocabulary, and processes of quality in order to fully achieve project goals, and to communicate effectively with QA professionals, external testers, project management, clients, and end-users. In this chapter, we’ll cover the “nutshell” version to facilitate that goal.

See also: Online Resources

The Top Five (Wrong) Reasons You Don’t Have Testers, courtesy Joel on Software:

Bugs come from lazy programmers.

My software is on the web. I can fix bugs in a second.

My customers will test the software for me.

Anybody qualified to be a good tester doesn’t want to work as a tester.

I can’t afford testers!

5.1. Considerations¶

For many organizations, the approach to software quality is one of prevention: it is obviously much better to prevent problems than to correct them.

— SWEBOK Guide V3.0 (Ch. 4)

As mentioned during the Requirements phase, the type of project deliverable will heavily affect quality goals and process. The first question is, what are we building? Creating software that must be correct because lives or money are on the line? One-shot space probe never to return? A formal quality process is unavoidable.

Working on games or other non-essential applications? Much less bureaucracy is called for. However, while it may appear that a QA process is not needed at all in these cases, note that customers will endure only a small, finite number of problems before abandoning a product (when not locked in, of course).

In other words, from a quality perspective, where does this project land with regard to defect tolerance?

▼ ?

┃◀─── Tolerable ─────────────────────────── Intolerable ───▶┃

2b || !2b # The Question

Do we really need QA? You may have read the recent headline, “Yahoo’s Engineers Move to Coding Without a QA Team” (ieee.org). Honestly, this may be acceptable if you have a mature product (such as a consumer web portal) that few depend on for business-critical needs. When choosing this path, however, note that the need for QA may have lessened but has not ceased.

Tip

When QA and testing are needed (they are) but not performed, defects do not simply disappear as we’d like them to. Rather, they are shifted into the laps of the dev-team and, ultimately, customers.

See also: Online Resources - Vitamin QA

If your team doesn’t have dedicated testers… you are either shipping buggy products, or wasting money by having $100/hour programmers do work that can be done by $30/hour testers. Skimping on testers is such an outrageous false economy that I’m blown away that more people don’t recognize it.

— Joel Spolsky, Blogger/co-founder StackExchange

5.1.1. Quality Dimensions¶

The measure of your quality as a public person, as a citizen, is the gap between what you do and what you say.

— Ramsey Clark, Former Atty. General

When assessing quality, a number of factors need to be considered. These software quality factors (ISO/IEC 25010 and the older ISO/IEC 9126) largely overlap with design considerations discussed previously. They’ve been broken down into internal and external categories, with characteristics defined by the Consortium for IT Software Quality (CISQ) shown in bold:


Data Quality

Ben Ettlinger is a friend of mine who illustrates the importance of data quality and therefore the data model, with a story about how NASA lost a $125 million Mars orbiter because one engineering team used metric units while another used imperial for a key spacecraft operation.

The logical data model [specification] can minimize or eliminate data quality issues such as this.

— Steve Hoberman, author "Data Modeling Made Simple"

A brief reminder that the quality of our data and data modeling can carry as much importance as our code.
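One way a data model can guard against a unit mix-up like the one in the story is to make the unit part of the value itself. Below is a minimal Python sketch of that idea, not a description of any real spacecraft system. The `Impulse` type, its field names, and the sample figures are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

# Conversion factor: one pound-force second expressed in newton-seconds.
LBF_S_TO_N_S = 4.4482216152605


@dataclass(frozen=True)
class Impulse:
    """An impulse value that always carries its unit (hypothetical type)."""
    value: float
    unit: str  # "N*s" (metric) or "lbf*s" (imperial)

    def __post_init__(self):
        # Reject anything the model does not know how to convert.
        if self.unit not in ("N*s", "lbf*s"):
            raise ValueError(f"unknown unit: {self.unit!r}")

    def to_newton_seconds(self) -> float:
        """Return the value in metric units, converting if necessary."""
        if self.unit == "N*s":
            return self.value
        return self.value * LBF_S_TO_N_S


# Each team may record values in its preferred unit; any consumer
# converts explicitly instead of assuming what the number means.
imperial = Impulse(100.0, "lbf*s")
metric = Impulse(444.82216152605, "N*s")
```

Because a bare number can no longer be passed around without a unit, a mismatch surfaces as an explicit conversion or an immediate error rather than as a silently wrong figure.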

5.1.2. Quality Assurance vs. Control¶

Quality assurance is process oriented and focuses on defect prevention, while quality control is product oriented and focuses on defect identification.

— Diffen.com

Quality Assurance

As defined at the start of the chapter, Quality Assurance (QA) is a proactive strategy, promoting quality best practice from the start of a project through the Requirements, Design, and Construction phases of the SDLC. Auditing of the development process is also performed, to assure “stakeholders” that requirements and quality plans are adhered to.

Techniques such as reviews of design and code are useful to confirm that work observes organizational and project standards. Steve McConnell, in the column “Software Quality at Top Speed,” argues:

Technical reviews are a useful and important supplement to testing. Reviews find defects earlier, which saves time and is good for the schedule. They are more cost effective on a per-defect-found basis because they detect both the symptom of the defect and the underlying cause of the defect at the same time.

For best results, technical reviews should include QA engineers as well as developers.

Quality Control

Test: the means by which the presence, quality, or genuineness of anything is determined; a means of trial.

— Dictionary.com

Quality Control (QC), on the other hand, is a reactive strategy, also known as inspection or testing. The software is searched for defects, the bulk of which happens from the middle to the end of the project as functionality nears completion. Ideally, all programming errors should be detected before software is released to the customer.

Unlike developer testing during the Construction phase, which aims to prove that the software works correctly, the goal of testing here is to “break things,” in a professional but adversarial manner. Once identified, defects and statistics are reported to stakeholders and management as a foundation for informed decision making.
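The difference in mindset can be sketched in a few lines of Python. The `average` function and the specific inputs below are hypothetical; the point is only to contrast a "prove it works" check with checks written to provoke a failure.

```python
def average(values):
    """Return the arithmetic mean of a non-empty sequence of numbers."""
    if not values:
        raise ValueError("average() requires at least one value")
    return sum(values) / len(values)


def test_developer_happy_path():
    # Developer-style check: typical input, expected answer.
    assert average([2, 4, 6]) == 4


def test_adversarial_empty_input():
    # QC-style check: what happens when there is nothing to average?
    try:
        average([])
    except ValueError:
        pass  # a clear, deliberate error is acceptable behavior
    else:
        raise AssertionError("empty input should be rejected")


def test_adversarial_extreme_values():
    # QC-style check: very large magnitudes should not produce nonsense.
    big = 1e308
    assert average([big, -big]) == 0.0
```

The functions follow the naming convention that test runners such as pytest collect automatically, but they are plain functions and can also be called directly.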

5.1.3. Motivation¶

High-quality software is not expensive. High-quality software is faster and cheaper to build and maintain than low-quality software, from initial development all the way through total cost of ownership.

— Capers Jones, The Economics of Software Quality

Motivations for improved quality include cost containment, risk management, and pride in workmanship. We’ll look at quality improvements as a strategy to raise customer, employee, and developer satisfaction.

5.1.3.1. Cost Containment¶

Most defects end up costing more than it would have cost to prevent them. Defects are expensive when they occur, both the direct costs of fixing the defects and the indirect costs because of damaged relationships, lost business, and lost development time.

— Kent Beck, Extreme Programming Explained: Embrace Change

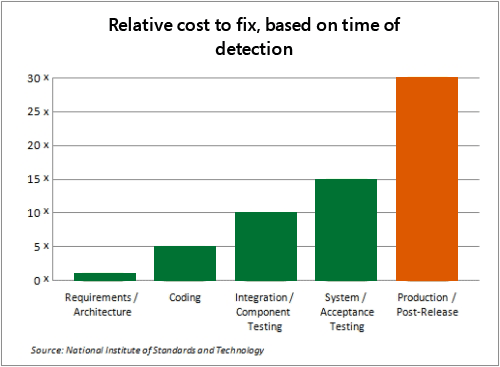

History has shown that while finding defects by testing after Construction is inefficient, fixing them in the field is far more costly, by an order of magnitude or two. Therefore the prime directive of the discipline of Software Quality (and Engineering) is to get defects found and fixed as soon as possible. Consider the “cost to fix” graph below, sourced from the US NIST:

Fig. 5.3 “An ounce of prevention is worth a pound of cure,” courtesy Microsoft and NIST (see note below)¶

Warning: Propellerhead Alert!

The cost of bug-fixing follows a similar curve to hardware memory-access times. When we obviate (prevent) a bug in the Design phase, it’s like accessing information from a lightning-quick CPU register. If we fix it during the Construction phase, we’re grabbing from middling main-memory. Fixing in the field, however, is like accessing information from a glacially-slow hard-disk. It takes a lot longer (eons in computer time) to pull the necessary bug-fixing information back into skulls months after the fact than it would have earlier.

Why is this the case? Joel Spolsky, in his piece Twelve Steps to Better Code, explains in a more accessible manner:

In general, the longer you wait before fixing a bug, the costlier (in time and money) it is to fix. For example, when you make a typo or syntax error that the compiler catches, fixing it is basically trivial.

When you have a bug in your code that you see the first time you try to run it, you will be able to fix it in no time at all, because all the code is still fresh in your mind.

If you find a bug in some code that you wrote a few days ago, it will take you a while to hunt it down, but when you reread the code you wrote, you’ll remember everything and you’ll be able to fix the bug in a reasonable amount of time.

But if you find a bug in code that you wrote a few months ago, you’ll probably have forgotten a lot of things about that code, and it’s much harder to fix… By that time you may be fixing somebody else’s code, and they may be in Aruba on vacation, in which case, fixing the bug is like science: you have to be slow, methodical, and meticulous, and you can’t be sure how long it will take to discover the cure…

He continues on to note how the very unpredictability of the time needed to fix latent defects can wreak havoc on schedules, another important reason to squash them early.

See Also: Sacred Cows

In this interesting piece at LessWrong and the book “The Leprechauns of Software Engineering,” developer/consultant Laurent Bossavit slaughters the sacred cows of software engineering, from the “increasing cost of defects” to the “10x Developer” and beyond, making the case that many famous foundational studies are deeply flawed and reinforced by citogenesis, the process where study citations loop upon themselves to become “truth.” The controversial pieces have received some “push back” of their own as well:

Bossavit seems to be aspiring to some academic ideal in which the only studies that can be cited are those that are methodologically pure in every respect. That's a laudable ideal, but it would have the practical effect of restricting the universe of allowable software engineering studies to zero.

— Steve McConnell

While it has matched this author’s experience that time wasted on defects rises according to their distance in space and time from creation (there are other factors of course), let’s concede that much software engineering knowledge is built on sand. Make your own decision on these topics, but be aware this rabbit hole is particularly deep.

See also: Move Your Bugs to the Left

To the left, to the left…

— Beyoncé, "Irreplaceable"

Move Your Bugs to the Left, i.e.: earlier, by samwho.co.uk:

Compiler ⏴ Tests ⏴ Code Review ⏴ QA/Beta ⏴ User

As noted previously, this book’s recommendation is to move them even farther to the left. ;-) This is a great nuts-and-bolts discussion of the process, however.

5.1.3.2. Risk Management¶

Narrator: A new car built by my company leaves somewhere traveling at 60 mph. The rear differential locks up. The car crashes and burns with everyone trapped inside. Now, should we initiate a recall?

Take the number of vehicles in the field, A, multiply by the probable rate of failure, B, multiply by the average out-of-court settlement, C. A times B times C equals X.

If X is less than the cost of a recall, we don't do one.

— Chuck Palahniuk, Fight Club

An automotive recall is a consummate example of how costly fixes done in the field can be. Ponder the 2015 Fiat Chrysler hacking incident, in which security researchers demonstrated that Jeep vehicles could easily be taken over remotely due to negligent security practice, prompting a recall of 1.4 million vehicles.

Risk Management includes the identification, prioritization, and handling of risks detrimental to the success of a software project. Such risks may be avoided, mitigated, or accepted when appropriate. These risks include:

Technical risks, such as changing requirements, poor preparation, poor execution, or implementing on top of unproven technology.

Schedule/Budget risks, which may stem from technical risks above, poor planning and estimates, or other causes.

Liability risk, which includes any potential liability should data-breach or disasters of other sorts occur.

Maintaining software quality is an important part of managing risks. We’ll discuss risk further in the project management challenges chapter.
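Prioritization is often done by ranking risks on their exposure, that is, the estimated probability of a risk occurring multiplied by its estimated cost, much like the narrator's arithmetic in the quote above. The sketch below assumes that approach; the `Risk` class, the register entries, and every number in it are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: float  # estimated chance of occurring, 0.0 to 1.0
    impact: float       # estimated cost if it occurs, in dollars

    @property
    def exposure(self) -> float:
        """Expected loss: probability multiplied by impact."""
        return self.probability * self.impact


def prioritize(risks):
    """Return risks sorted from highest to lowest exposure."""
    return sorted(risks, key=lambda risk: risk.exposure, reverse=True)


# Hypothetical entries, one for each risk category listed above.
register = [
    Risk("Unproven database technology", probability=0.30, impact=200_000),
    Risk("Optimistic schedule estimate", probability=0.50, impact=80_000),
    Risk("Customer data breach", probability=0.05, impact=2_000_000),
]

for risk in prioritize(register):
    print(f"{risk.name}: ${risk.exposure:,.0f}")
```

Note how the least likely item ranks first once its cost is taken into account, which is why liability risks deserve attention even when they seem remote.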

5.1.3.3. Pride in Workmanship¶

Quality, far beyond that required by the end user, is a means to higher productivity.

— DeMarco/Lister, Peopleware: Productive Projects and Teams, 3rd Ed., Ch. 4

In the book Peopleware (Ch. 4) “Quality, If Time Permits,” authors DeMarco and Lister discuss the often unnoticed impact of quality on team productivity. While the builders of a product often desire to construct and maintain a higher-quality product than the market requires, that desire is also a powerful force that motivates:

We all tend to tie our self-esteem strongly to the quality of the product we produce—not the quantity of product, but the quality. (For some reason, there is little satisfaction in turning out huge amounts of mediocre stuff, although that may be just what’s required for a given situation.)

The reverse is also stated:

Any step you take that may jeopardize the quality of the product is likely to set the emotions of your staff directly against you.

Unsurprisingly, a team will pull together and work a lot harder for a product they’re proud of—less so for a piece of shit. Due to fewer defects and a more robust supporting environment, they will also work more efficiently, negating additional costs. Chapter 28 adds, “People get high on quality and outdo themselves to protect it.”

5.2. Roles and Responsibilities¶

Testing is no longer seen as an activity that starts only after the coding phase is complete with the limited purpose of detecting failures.

— SWEBOK Guide V3.0, Ch. 4

Indeed, the quote above helps explain why this chapter is not named “testing” or “quality assurance,” after the department it is principally associated with. As mentioned, quality planning must start from the beginning and is the responsibility of everyone on the team, not a single department or individual. The roles below drive the final quality of a software project in the following ways:

Customers and industry state non-functional requirements.

Architects design software to meet quality goals.

Developers construct for verification, code to standards, use analysis tools, and write unit/integration tests.

QA professionals critique design, monitor the construction phase, plan, test, and report on current project status.

Project managers schedule time and attention, and “rally the troops” in support of quality as an internal voice of the customer.

5.2.1. QA Department¶

That's Tron. He fights for the Users.

— Untitled Program

The Quality Assurance (QA) department should be thought of as an unbiased partner in the goal of creating great software (an organizational sanity check, if you will) and not the source of quality in a project. QA consults, investigates, and reports on quality; it does not create it.

Accordingly, software should arrive at the doorstep of this phase with a baseline level of quality, having already been through stages of design, review, and developer testing. The Quality/Testing phase of the software lifecycle (in a well-managed project) should focus on the discovery of non-obvious defects and edge cases. For example, “obscure option X conflicts with minor feature Y,” rather than “crashes at startup.” Otherwise, significant time and money are being wasted on high-latency communication turnaround.

Warning: Doing it Wrong

If a dev-team is “throwing code over the wall” to QA without verifying it properly beforehand, whether due to external pressure, disorganization, a desire to avoid responsibility, or worse, it can be stated definitively that the organization is “doing it wrong.” New code must be delivered with a base level of testing, lest it waste everyone’s time.

Department Responsibilities:

Management of cost and risk, through implementation of quality best practice.

Monitoring of the development process to flag issues.

Testing

Reporting on project status, answering questions, and providing information for decision-making.

Personnel:

A QA Manager will focus on standards, documentation, planning, mentoring, and team-building.

SQA Engineers are developers that specialize in quality aspects, focusing on design, standards, code review, and test-suite automation.

SQA Analysts may perform focused planning, but manual and automated testing of the product are their main tasks. Other aspects of the job include research of as yet undocumented functionality, and speaking up for the end-user during the development process.

See Also: Golden Ratio

You are going to need 1 tester for every 2 programmers (more if your software needs to work under a lot of complicated configurations or operating systems).

— Joel Spolsky, Blogger/co-founder StackExchange

As for the age-old question of the ideal ratio of developers to testers: as always, the answer is “it depends,” though it is typically “several.” The topic is explored in these two questions from StackExchange.

Autonomy

It’s important that the QA department have an equal say at the table alongside project and engineering management, and not report to them. Otherwise, it’s too easy to bully the team into shipping when quality objectives have not yet been met. It will happen as soon as deadline pressure occurs, so plan ahead by not putting your QA team into a subservient position.

Other often overlooked issues include a decent career path and working conditions for QA personnel.

5.2.2. Black Team, The¶

From the book Peopleware (Ch. 22) we have the story of the “Black Team,” a merciless gang of QA Professionals likened to Ming the Merciless. While the story is recounted to illustrate a “jelled” team (a top performer due to high-morale), it also nicely describes the QA ethos:

Pitiful Earthlings, What Can Save You Now?

At first it was simply a joke that the tests they ran were mean and nasty, and that the team members actually loved to make your code fail. Then it wasn’t a joke at all. They began to cultivate an image of destroyers. What they destroyed was not only your code but your whole day. They did monstrously unfair things to elicit failure, overloading the buffers, comparing empty files, and keying in outrageous input sequences. Grown men and women were reduced to tears by watching their programs misbehave under the demented handling of these fiends. The worse they made you feel, the more they enjoyed it. To enhance the growing image of nastiness, team members began to dress in black (hence the name Black Team). They took to cackling horribly whenever a program failed. Some of the members grew long mustaches that they could twirl in Simon Legree fashion. They’d get together and work out ever-more-awful testing ploys. Programmers began to mutter about the diseased minds on the Black Team.

Of course, the company was quite pleased. Every bug the team found was one that their customers wouldn’t.

5.2.3. Total Quality Management (TQM)¶

TQM describes a management approach to long–term success through customer satisfaction.

— ASQ (American Society for Quality)

Total Quality Management (TQM) is a group of existing techniques, so named by the US Navy, which emphasizes a climate of continuous improvement and treats quality as the responsibility of everyone involved.

TQM is largely built on the pioneering work of statistician W. Edwards Deming, known for his great influence on the manufacturing successes of post-WWII Japan, which rose from the ashes to become a leader in the world economy within a few short decades. In his book, Out of the Crisis, Deming described fourteen principles for improving business effectiveness. Listed below are those most applicable to software project management:

Cease dependence on inspection to achieve quality. Eliminate the need for massive inspection by building quality into the product in the first place.

Improve constantly and forever the system of production and service, to improve quality and productivity, and thus constantly decrease costs. (See kaizen)

Eliminate slogans, exhortations, and targets for the work force asking for zero defects and new levels of productivity. Such exhortations only create adversarial relationships, as the bulk of the causes of low quality and low productivity belong to the system and thus lie beyond the power of the work force.

Eliminate numerical quotas and goals.

Remove barriers that rob the hourly worker of his right to pride of workmanship.

Institute a vigorous program of education and self-improvement.

Put everybody in the company to work to accomplish the transformation. The transformation is everyone’s job.

The heavyweight ISO 9000 and the Six-Sigma data-driven approaches follow TQM’s lead. You’ll see these management themes repeatedly in the following sections.

The trade-off between price and quality does not exist in Japan. Rather, the idea that high quality brings on cost reduction is widely accepted.

— D. Tajima and T. Matsubara, “Inside the Japanese Software Industry,” Computer, Vol. 17 (March 1984)

5.3. Process¶

Program testing can be used to show the presence of bugs, but never to show their absence!

— Edsger W. Dijkstra

Fig. 5.6 Sub-steps of the Quality phase¶

Now that we’ve discussed the importance and considerations of Software Quality, it’s time to get to work. A high-level overview of the Quality process is listed below, with each step followed by the SDLC phases it roughly corresponds to in time:

Planning - Requirements, Design

Documenting - Design, Testing

Testing - Overlaps Construction and Testing

Note that quality activities encompass the entire software development life cycle and run in parallel to it. Prior to Planning, the project’s quality goals will be defined during the Requirements phase. Consultation with QA personnel in such matters would be favorable.

Egoless Development

It’s important that the team take responsibility for the project as a whole and work together to improve quality. A favorable attitude toward failure discovery and correction, with criticism directed at software or process rather than individuals, goes a long way in this regard toward keeping the team working effectively.

5.3.1. Quality Planning¶

Quality is never an accident;

it is always the result of intelligent effort.

— John Ruskin (Unconfirmed)

The QA activities that need to be planned upfront include the following:

Understanding—internalization of project requirements, specs, organization policy, and applicable regulation, using documentation prerequisites for reference.

Analysis of:

Costs vs. benefits

Risks and costs of failures

Selection of types, depth, and quantity of testing found appropriate during analysis.

Determination of resource requirements

Coordination of personnel

Scheduling of facilities and equipment

Definition of policy and strategy

Definition of roles and responsibilities

Scheduling of activities.

The resulting documentation, often called the Test Plan, includes what will be tested, and why. Below are a number of resources with detailed guidance.

See also: Online Resources

Defining a Quality, Quality Plan, presentation courtesy Construx consultants.

How to Write a Great Software Test Plan, by Robert Japenga

Test Plan Fundamentals

5.3.1.1. Test Selection¶

An effective test automation strategy calls for automating tests at three different levels, as shown in the figure below, which depicts the test automation pyramid.

— Mike Cohn

Previously, under Developer Testing we began to explore quality concerns during a project’s Construction phase—from the developer’s viewpoint. As you may remember, we organized our tests into three specific categories of granularity, a common way to classify them:

Unit or component testing

Integration testing, between units

System, or E2E testing

In this section we return to test granularity to consider it from a larger project and quality perspective.

Test Pyramid

One of our primary quality planning responsibilities is selection of the “types, depth, and quantity” of testing appropriate for the project’s requirements. Let’s step back a moment to think about what decisions will be made here. How might we prioritize each category?

Fig. 5.7 Testing Pyramid¶

Well, that’s where the “test pyramid” comes in—a natural consequence of the scale of the tests we’ll require.

Recall that proper unit tests are highly focused, check one unit of functionality at a time, need little set up time, and optimally need very little time to execute. A well run project will undoubtedly have shitloads of these.

In contrast, integration tests are more time consuming all around, due to their need to validate larger subsystems, each composed of multiple units. From authoring, to setup, to execution, everything takes a bit longer. Not surprisingly, end-to-end testing for whole systems takes even longer. These tests will be the lengthiest and therefore least numerous of the bunch. Use them sparingly but don’t forget them; they are essential for “smoke tests” and all-around sanity checks.
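The difference in weight between the bottom layers of the pyramid shows up even in a toy example. The sketch below is illustrative only; `parse_price`, `total_from_file`, and the JSON order format are hypothetical names and structures invented for this purpose.

```python
import json
import os
import tempfile


def parse_price(text):
    """Unit under test: convert a string such as '$4.50' to cents."""
    return round(float(text.strip().lstrip("$")) * 100)


def total_from_file(path):
    """Larger subsystem: read a JSON order file and total its prices."""
    with open(path, encoding="utf-8") as handle:
        order = json.load(handle)
    return sum(parse_price(item["price"]) for item in order["items"])


def test_parse_price_unit():
    # Unit test: one function, no setup, near-instant execution.
    assert parse_price("$4.50") == 450


def test_total_from_file_integration():
    # Integration test: needs a real file on disk, so setup and
    # cleanup take more effort than the check itself.
    order = {"items": [{"price": "$4.50"}, {"price": "$1.25"}]}
    with tempfile.TemporaryDirectory() as folder:
        path = os.path.join(folder, "order.json")
        with open(path, "w", encoding="utf-8") as handle:
            json.dump(order, handle)
        assert total_from_file(path) == 575
```

The unit test is a single line, while the integration test is mostly scaffolding. Multiply that overhead across a whole system and the pyramid's proportions follow naturally.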

Finally, note the small dollop of manual testing at the peak of the pyramid. This should be the point where expensive, clumsy, easily bored yet insightful humans get involved. Avoid boring them with extensive manual testing or insight will shut off like a light switch.

It's not a magic way to save money -- the developers obviously end up spending time writing tests. But the long-term value of those tests is cumulative, whereas the effort spent on manual testing is spent anew *every* release.

— ef4 at HN

This model presupposes management has had the forethought to plan ahead, of course. As we’ve seen, a commitment to comprehensive automation is an investment, but one with a high return (or ROI). Well, that’s the theory anyway. At most shops you’ll see something different.

Warning: 🍦 Ice Cream Cone

Back to life, back to reality… Back to the here and now, yeah

— Soul II Soul

Fig. 5.8 We all scream…¶

Other projects, run by less organized or less experienced folks, will often concentrate their time on manual testing, never getting around to automating a test suite. Unknowingly, they’ve built the dreaded “ice cream cone” instead. Imagine an upside down test-pyramid, with a large scoop of inefficient manual testing heaped on top. ☺ They’ll get less done, have more bugs, worry more, suffer insomnia, and come to avoid innovative features and helpful refactors for fear of breaking things.


5.3.1.2. Timeline¶

Testing is what many people think of when they think of software quality assurance. Testing however, is only one part of a complete quality-assurance strategy, and it’s not the most influential part. Testing can’t detect a flaw such as building the wrong product or building the right product in the wrong way. Such flaws must be worked out earlier than in testing—before construction begins.

— Steve McConnell, Code Complete (Ch. 3)

In the book Code Complete, there is an interesting discussion regarding what quality means at different times of the project. In agreement with TQM, McConnell makes the case that when a project focuses its quality efforts at the start, it’s possible to require and design high quality into it. For example, a Casio wristwatch can’t be tested into a Rolex, no matter how much effort is expended.

In the middle of the project, we look to developer testing to promote correctness, while at the end, system testing is the focus.

Optimal Quality

To find the optimal quality of a product, we ask the fundamental question, what is the highest quality that can be achieved at the lowest cost? First we estimate the costs of various levels of defect prevention, then the costs of missed targets. Finally, we compare them.

The end product must factor into the equation, of course. Would we be able to recoup millions of dollars in extensive testing for a cheap commodity consumer product? No, probably not. The idea that quality targets must be appropriate to the application, rather than meet an absolute standard, is also known by the phrase fitness for purpose.
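The estimate-and-compare step can be sketched as a small calculation. All figures below are hypothetical placeholders, in thousands of dollars; real estimates would come from the project's own cost and risk analysis.

```python
# Hypothetical estimates, in thousands of dollars, for three levels
# of quality effort: the cost of preventing defects at that level,
# and the expected cost of the failures that still get through.
levels = {
    "minimal":    {"prevention": 20,  "failure": 400},
    "moderate":   {"prevention": 90,  "failure": 120},
    "exhaustive": {"prevention": 350, "failure": 30},
}


def total_cost(estimate):
    """Cost of preventing defects plus the cost of those that escape."""
    return estimate["prevention"] + estimate["failure"]


def cheapest_level(options):
    """Return the name of the level with the lowest total cost."""
    return min(options, key=lambda name: total_cost(options[name]))


best = cheapest_level(levels)
for name, estimate in levels.items():
    marker = " (optimal)" if name == best else ""
    print(f"{name}: {total_cost(estimate)}{marker}")
```

With these made-up numbers, both extremes lose: too little prevention is swamped by failure costs, and too much prevention costs more than it saves.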

As Steve McConnell concludes in Code Complete (Ch. 20):

Quality is free in the end, but it requires a reallocation of resources so that defects are prevented cheaply instead of fixed expensively.

See also: Online Resources

Testing Space

Software testing consists of the dynamic verification that a program provides expected behaviors on a finite set of test cases, suitably selected from the usually infinite execution domain.

— SWEBOK V3, Ch. 4

Wyld Stallyns - promoting excellence. ♪

As noted at the beginning of the software lifecycle, perfection is never fully achieved; it’s an endless job. The list of things that can go wrong is effectively infinite, making complete test coverage of a program impossible. Roger S. Pressman, Esquire, in his classic tome on Software Engineering states:

Exhaustive testing presents certain logistical problems… Even a small 100-line program with some nested paths and a single loop executing less than twenty times may require 10 to the power of 14 (100 trillion) possible paths to be executed.
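A figure of that magnitude is easy to reproduce. The quote does not say how many paths run through the loop body, so the sketch below assumes five per iteration, with the loop running between 1 and 20 times. Both the path count and the testing rate are assumptions made for illustration.

```python
# Assumption (not stated in the quote): each pass through the loop
# body can follow any one of 5 distinct paths, and the loop runs
# anywhere from 1 to 20 times.
PATHS_PER_ITERATION = 5
MAX_ITERATIONS = 20

# A run with n iterations has 5**n possible paths; add them all up.
total_paths = sum(
    PATHS_PER_ITERATION ** n for n in range(1, MAX_ITERATIONS + 1)
)

# At a hypothetical rate of one test per millisecond, around the
# clock, how many years would exhaustive testing take?
MS_PER_YEAR = 1000 * 60 * 60 * 24 * 365
years = total_paths / MS_PER_YEAR

print(f"{total_paths:.2e} paths, about {years:,.0f} years of testing")
```

Under these assumptions the total lands just above 10 to the power of 14, and running every path would take thousands of years.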

Termination - When to Stop

The operation was a success, but the patient died.

— Anonymous

Consequently, decisions of process and approach must always take economic factors into consideration with regard to how long to continue. Termination, or “when to stop” is an important decision of the testing process. Accordingly, finite resources must be focused on high priority and frequently-occurring issues first. As each round of testing occurs, quality converges upon the agreed-upon confidence level found acceptable by all parties. When it is time to stop testing and “ship”, it is because the team has decided that all known defects have been fixed or postponed, and rate of discovery has started to wane.
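The two stopping conditions named above, all known defects fixed or postponed and a waning discovery rate, can be expressed as a simple check. This is a sketch under stated assumptions: the record layout, the `window` parameter, and the definition of "waning" as a strict week-over-week decline are all hypothetical choices, not an established standard.

```python
def ready_to_ship(known_defects, weekly_discoveries, window=3):
    """Return True when both stop criteria are met.

    known_defects: list of dicts with a 'status' key, one per defect.
    weekly_discoveries: new-defect counts per week, oldest first.
    window: how many recent weeks must show a declining trend
            (a hypothetical default; tune per project).
    """
    # Criterion 1: every known defect is either fixed or postponed.
    all_resolved = all(
        defect["status"] in ("fixed", "postponed")
        for defect in known_defects
    )

    # Criterion 2: the discovery rate has started to wane, taken here
    # to mean each of the last `window` weeks found fewer defects
    # than the week before it.
    recent = weekly_discoveries[-(window + 1):]
    waning = len(recent) == window + 1 and all(
        later < earlier for earlier, later in zip(recent, recent[1:])
    )

    return all_resolved and waning
```

For example, a project whose known defects are all fixed or postponed and whose weekly counts run 12, 9, 5, 2 would pass, while one with a single open defect or a rising count would not. The final decision still rests with the team; a check like this only summarizes the evidence.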

5.3.2. Documentation

QA documentation is both an input to and the end-product of the Testing phase, and therefore occurs throughout the lifetime of a project.

- Planning phase:

The Test Plan discussed above is drafted, approved, and referred to during testing.

- Testing phase:

Test Cases: the tests that will be attempted, see below.

Test Logs: when each test was conducted, by whom, with what configuration, and with what results.

Defect/bug/enhancement reports

- Completion documents:

Test Summary: analysis of logs and conclusion regarding launch readiness.

Test Cases

A test case is a documented set of conditions and inputs under which it is determined that a unit of software works as designed and requirements are met. Test case documentation should include all necessary details so that a case can be picked up and run by anyone. Think reproducibility in the scientific method sense.

Testing targets comprise:

Errors, or mistakes in the program code produced by developers.

Faults, instances of an error being executed, commonly known as bugs.

Failures, which occur when the system is unable to perform a required task.

See Also: Writing Good Test Cases

On Test Cases, courtesy Software Testing Fundamentals:

As far as possible, write test cases in such a way that you test only one thing at a time. Do not overlap or complicate test cases. Test cases should be “atomic” and reproducible.

Ensure that all positive scenarios and negative scenarios are covered.

Language:

Write in simple and easy to understand language.

Use active voice: Do this, do that.

Use exact and consistent names (of forms, fields, etc).

Characteristics of a good test case:

Accurate: Exacts the purpose.

Economical: No unnecessary steps or words.

Traceable: Capable of being traced to requirements.

Repeatable: Can be used to perform the test over and over.

Reusable: Can be reused if necessary.
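As a rough illustration of the guidance above, a test case can be captured as a small record whose fields mirror those characteristics. This is only a sketch: the class name `DocumentedTestCase`, its fields, and the identifiers `TC-042` and `REQ-7` are hypothetical, and many teams keep the same information in a test-management tool or spreadsheet instead.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DocumentedTestCase:
    case_id: str
    requirement_id: str   # Traceable: links the case back to a requirement
    objective: str        # Accurate: states the single thing being tested
    preconditions: List[str] = field(default_factory=list)
    steps: List[str] = field(default_factory=list)  # active voice, exact names
    expected_result: str = ""

    def problems(self) -> List[str]:
        """List the reasons someone else could not pick up and run this case."""
        found = []
        if not self.requirement_id:
            found.append("no requirement to trace to")
        if not self.steps:
            found.append("no steps to follow")
        if not self.expected_result:
            found.append("no expected result")
        return found


login_case = DocumentedTestCase(
    case_id="TC-042",
    requirement_id="REQ-7",
    objective="Reject a login attempt that uses a wrong password",
    preconditions=["User 'alice' exists", "The login form is displayed"],
    steps=[
        "Enter 'alice' in the Username field",
        "Enter a wrong password in the Password field",
        "Click the Log In button",
    ],
    expected_result="The form shows an error and stays on the login page",
)
print(login_case.problems())  # []
```

The case tests one thing, names fields exactly, and states its expected result, so a second tester can repeat it without asking the author.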

5.3.3. Testing

Testing is a destructive, even sadistic process, which explains why most people find it difficult.

— Glenford J. Myers, The Art of Software Testing

Testing is the phase where the software is exercised and inspected in anticipation of finding mistakes and defects of various sorts. While testing, we’ll investigate what has been built, how it is being built, and current project behavior. Once determined, the findings are reported.

Fig. 5.12 The horn o’ plenty.

The Testing phase has a different emphasis than developer testing: here we want to find errors. In “The Art of Software Testing”, author Glenford Myers elaborates:

A good test case is a test case that has a high probability of detecting an undiscovered error, not a test case that shows that the program works correctly.

As complex software is so hard to get right, you can expect a veritable cornucopia of bugs—an abundant, fruitful, bountiful, and other synonyms for abundant harvest.

5.3.3.1. Techniques

Each new user of a new system uncovers a new class of bugs.

— Brian Kernighan, Bell Labs

A number of approaches may be used to test the software, including:

Categories and Types:

Manual and automated/scripted testing

Simulations, prototypes, models

Mathematical proofs of correctness, for math or CS-heavy projects.

Functional or black-box, testing without knowledge or regard to internal implementation.

Structural or white-box (or gray), testing with internal knowledge.

Smoke testing, a quick “once over” of a build to determine whether it should be tested further.

Ad-hoc testing, based on intuition and experience.

A QA Engineer walks into a bar. Orders a beer. Orders 0 beers. Orders 999,999,999 beers. Orders a lizard. Orders -1 beers. Orders a sfdeljknesv.

— Bill Sempf

Boundary testing, i.e., checking at “the extremes of the input domain.” See above quote.
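The bar joke translates almost directly into a boundary test. The sketch below assumes a hypothetical `order_beers` function that accepts whole numbers from 1 to `MAX_BEERS`; both the function and the limit of 100 are invented for illustration, and whether an order of zero should be accepted is a design decision the requirements would have to settle.

```python
MAX_BEERS = 100  # hypothetical upper limit for a single order


def order_beers(quantity):
    """Return the accepted quantity, or raise ValueError for an invalid order."""
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(quantity, bool) or not isinstance(quantity, int):
        raise ValueError(f"not a whole number of beers: {quantity!r}")
    if not 1 <= quantity <= MAX_BEERS:
        raise ValueError(f"quantity out of range: {quantity}")
    return quantity


def is_accepted(quantity):
    """Report whether an order goes through, without propagating the error."""
    try:
        order_beers(quantity)
        return True
    except ValueError:
        return False


# Each pair is (input, should the order be accepted?).
BOUNDARY_CASES = [
    (1, True),               # lowest valid value
    (MAX_BEERS, True),       # highest valid value
    (0, False),              # just below the valid range
    (-1, False),             # negative
    (MAX_BEERS + 1, False),  # just above the valid range
    (999_999_999, False),    # absurdly large
    ("lizard", False),       # wrong type entirely
    ("sfdeljknesv", False),  # garbage input
]

unexpected = [(value, expected) for value, expected in BOUNDARY_CASES
              if is_accepted(value) != expected]
print(unexpected)  # []
```

Values sit on, just inside, and just outside each edge of the valid range, which is where off-by-one mistakes tend to hide.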

Levels of testing, and who performs them:

Fig. 5.13 What the Beta testers received…

Unit testing (Developers)

Integration testing (Developers/QA)

System testing (QA), encompassing the following:

Alpha testing (Developers)

Functionality

Internationalization (i18n) and localization (l10n) testing, performed by QA’s i18n team or externally.

Including correct handling of Unicode input and output.

Regression testing, to confirm previous functionality has not broken.

Performance/stress

Portability

Security, and fuzz testing

Usability and HCI testing

Field testing (aka External Beta testing)

Acceptance testing (by Clients or Users)

Test Coverage

From time to time I hear people asking what value of test coverage (also called code coverage) they should aim for, or stating their coverage levels with pride. Such statements miss the point.

— Martin Fowler

Fig. 5.14 Coverage: Yes, no, maybe so.

Test (or code) coverage is a measure of how much of the project code is exercised by tests, typically given as a percentage, with higher numbers often taken to imply higher-quality software. Criteria for measurement include:

Function coverage

Statement coverage

Branch coverage

Condition coverage

Many more…
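The difference between these criteria is easiest to see on a tiny example. The function below is hypothetical and contains a deliberate bug: a single test executes every statement, yet the defect sits on the branch that test never takes.

```python
def shipping_label(name, express):
    """Build a label for a parcel. Deliberately buggy, for illustration only."""
    prefix = None
    if express:
        prefix = "EXPRESS: "
    return prefix + name  # breaks when express is False, since prefix is still None


# This one test executes every statement in the function, so a tool
# measuring statement coverage would report 100%.
assert shipping_label("Ada", express=True) == "EXPRESS: Ada"

# Branch coverage also demands a case where the `if` is NOT taken,
# and that case exposes the defect.
try:
    shipping_label("Ada", express=False)
    untaken_branch_works = True
except TypeError:
    untaken_branch_works = False

print(untaken_branch_works)  # False
```

Full statement coverage therefore says little on its own, which is part of why a coverage percentage is a weak proxy for quality.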

Warning: Coverage In Moderation

When measurements become targets, they encourage gaming.

— Sriram Narayan, Author

A thoughtful discussion by Martin Fowler on why aiming for 100% test coverage can be counter-productive.

“Testivus On Test Coverage”, another gem by Alberto Savoia. “The young apprentice and the grizzled great master finished drinking their tea in contemplative silence.”

5.3.3.2. Execution

It is perhaps obvious but worth recognizing that software can still contain faults, even after completion of an extensive testing activity.

— SWEBOK V3, Ch 4

Here the “test suite,” or entire collection of test cases, is executed. Each case is run and the results logged, whether the run is automated or manual. Issues found must be entered into a bug-tracking database for review, tracking, and analysis, lest they be forgotten.
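For an automated suite, running the cases and producing a log entry can be as simple as the sketch below, which uses Python's standard `unittest` module. The `ArithmeticTests` class is a stand-in for real test cases, and the `run_and_log` helper, the log fields, and the tester name `qa-bot` are assumptions made for illustration.

```python
import platform
import unittest
from datetime import datetime, timezone


class ArithmeticTests(unittest.TestCase):
    """Two stand-in test cases; a real suite would exercise the product."""

    def test_addition(self):
        self.assertEqual(2 + 2, 4)

    def test_division_by_zero_is_rejected(self):
        with self.assertRaises(ZeroDivisionError):
            1 / 0


def run_and_log(test_class, tester):
    """Run every case in test_class and return one log entry recording
    when it ran, who ran it, the configuration, and the results."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(test_class)
    result = unittest.TestResult()
    suite.run(result)
    return {
        "when": datetime.now(timezone.utc).isoformat(),
        "tester": tester,
        "configuration": f"Python {platform.python_version()} on {platform.system()}",
        "tests_run": result.testsRun,
        "failures": [str(test) for test, _ in result.failures],
        "errors": [str(test) for test, _ in result.errors],
    }


log_entry = run_and_log(ArithmeticTests, tester="qa-bot")
print(log_entry["tests_run"], log_entry["failures"], log_entry["errors"])
```

Anything that lands in `failures` or `errors` is a candidate for the bug-tracking database mentioned above.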

Bug Reports

To minimize communication lag, a bit of time must be invested in each bug to understand and document it fully enough for others to tackle. When writing bugs, think documentation. Don’t assume your reader knows every technical detail about the project. For example, a project manager may need to triage issues, or maintenance could be outsourced to another team.

Though we might wish otherwise, bugs are a fact of life in software development. Unfortunately for developers, so are unhelpful and infuriating bug reports.

Bad bug reports waste time and money while everyone goes back and forth to clarify the necessary details.

— viget.com

Good bug reports must, at a minimum:

Not be a duplicate; search the existing reports before creating a new one.

Have a concise summary

Have specific factual details:

What was expected, and what happened.

Precise steps to reproduce

Version information

Platform information (if necessary)

Severity
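The checklist above can be enforced mechanically before a report is filed. The following is a minimal sketch, assuming a hypothetical `BugReport` record; the field names and the naive duplicate check (an exact match on the normalized summary) are illustrative and not how any particular bug tracker works.

```python
from dataclasses import dataclass, field
from typing import List

REQUIRED_FIELDS = ("summary", "expected", "actual",
                   "steps_to_reproduce", "version", "severity")


@dataclass
class BugReport:
    summary: str = ""
    expected: str = ""
    actual: str = ""
    steps_to_reproduce: List[str] = field(default_factory=list)
    version: str = ""
    severity: str = ""
    platform: str = ""  # optional: only "if necessary"

    def missing_details(self) -> List[str]:
        """Names of required fields that were left empty."""
        return [name for name in REQUIRED_FIELDS if not getattr(self, name)]


def is_duplicate(report, existing_reports):
    """Naive duplicate search: same summary, ignoring case and outer spaces."""
    wanted = report.summary.strip().lower()
    return any(wanted == other.summary.strip().lower()
               for other in existing_reports)


vague = BugReport(summary="Login is broken")
print(vague.missing_details())
# ['expected', 'actual', 'steps_to_reproduce', 'version', 'severity']

already_filed = [BugReport(summary="login is broken ")]
print(is_duplicate(vague, already_filed))  # True
```

A report that fails either check costs the reporter a minute to fix, rather than costing the team a round of clarifying questions.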

5.3.3.3. Tools

There are a large number of important and helpful tools to assist in the quality assurance process, including:

Debuggers

Coverage analysis tools

System tools and tracers, such as strace, dtrace, and Windows Sysinternals

Network monitors, such as Wireshark, “Go Deep.”

Web capture and replay tools, notably Selenium.

Test harnesses, containers, and virtual environments.

and many more

5.3.3.4. Mildly Irritated Props

Mother… said, "Somebody has to Clean all this away. Somebody, SOMEBODY has to, you see."

Then she picked out two Somebodies. Sally and me.

— Dr. Seuss, The Cat In The Hat Comes Back

During test execution, QA personnel must juggle multiple tasks at once, such as testing, investigating and writing new bugs, verifying old bugs, performing legwork to figure out undocumented features, and explaining them to others (ideally by writing wiki pages so the explanation isn’t needed again). More generally, they’ll be managing truckloads of ambiguity and chasing organizational fires.

Somebody’s gotta do it.

See also: Online Resources

The Unique Role of Continuous Integration in Quality Assurance

“Continuous Integration (CI) systems have dramatically cut down on overhead and testing time while improving effectiveness and reliability of test cases.”

Tip: Software Testing Antipatterns

Software Testing Anti-Pattern List,

From the Codepipes Blog, by Kostis Kapelonis.

Having unit tests without integration tests

Having integration tests without unit tests

Having the wrong kind of tests

Testing the wrong functionality

Testing internal implementation

Paying excessive attention to test coverage

Having flaky or slow tests

Running tests manually

Treating test code as a second class citizen

Not converting production bugs to tests

Treating TDD as a religion

Writing tests without reading documentation first

Giving testing a bad reputation out of ignorance

5.3.4. Maintenance

As mentioned previously (snooze), quality-related documents need to be kept updated throughout the project life-cycle, as requirements, designs, and testing plans change, and lessons are learned.

See also: Books

The Art of Software Testing, 3rd Edition

by Glenford J. Myers, Sandler, & Badgett

“The classic, landmark work on software testing.”

This is one of the only comprehensive, well-written,

and updated books on Software Quality.

Testing Computer Software, 2nd Edition,

by Kaner, Falk, and Nguyen.

Note: Both these books, while full of timeless advice, are getting a bit “long in the tooth” with their examples. If you don’t mind “partying like it’s 1999,” they should serve you well.

See also: Online Resources

Software Testing Fundamentals (STF)

A website dedicated to improving basic knowledge in the field of Software Testing. “Our goal is to build a useful repository of Quality Content on Quality.”

Software Testing Glossary

The Software Quality Assurance & Testing Stack Exchange is a question and answer site for software quality control experts, automation engineers, and software testers.

ISO 9000 - Quality Management,

courtesy iso.org (think BIG projects)

Software Testing: How to Make Software Fail,

an online course by Udacity.

Seven Basic Tools of Quality,

“a designation given to a fixed set of graphical techniques identified as being most helpful in troubleshooting issues related to quality.”

Wrapping Up

Before the risin' sun, we fly

So many roads to choose

We'll start out walkin' and learn to… run

(And yes, we've just begun) ♪

— Carpenters

As you can see, we’ve only just begun learning about the subject of Software Quality; consult the resources in this chapter to explore further. We’ll discuss the release and maintenance of projects in the next chapter.

TL;DR

Functional quality is the degree to which a system, component, or process meets specified requirements, customer needs, or expectations.

Structural quality refers to internal quality attributes such as maintainability, portability, and adherence to sound software engineering practice.

Developers must understand the concerns, vocabulary, and processes of quality to communicate them with others.

The ship-now and fix-later approach to software is overused and costly to all concerned.

“Most defects end up costing more than it would have cost to prevent them.”

Software Quality is the responsibility of everyone involved on the project.

As testing could continue indefinitely, it is important to plan the criteria for termination beforehand.

“Quality Assurance is process oriented and focuses on defect prevention.”

“Quality Control is product oriented and focuses on defect identification.”

Planning for software quality must start from the beginning of the software life cycle.

A project can’t be tested into high quality; quality must be designed in from the beginning.

To be effective, the QA department should have an equal say at the table alongside Project and Engineering management, and should not report to them.

Test cases should be documented clearly enough to be performed or replicated by others.

Time must be invested in each bug to understand and document it fully enough for others to tackle.

Quality-related documents need to be kept updated throughout the project life cycle.